Workshop

Deadline for applications to the workshop has ended

The application deadline was July 26, 2021, 11pm CEST and has now passed.

Fear not! We will be making all the material free as the workshop progresses so

you can follow along. All technical talks will be live streamed recorded and

all workshops will be recorded. We will post links to relevant content in due

course.This website site contains information, documentation, timetables, and more for

participants of the Unikraft Summer of Code 2021 (USoC21). If you are not

participating in USoC21 feel free to use this site and its resources to learn

how to build Unikraft unikernels.

Unikraft Summer of Code is a free two week virtual Unikernel and library

Operating Systems workshop held by members of the Unikraft community including

professors, lecturers, PhD and MSci students from:

To learn more about Unikraft, please visit the Unikraft Project

Website, visit the main documentation website or checkout the GitHub project.

In this workshop, you will learn about how to build Unikraft unikernels,

including zero-to-hero workshops on how to get started using Unikraft. As the

week progresses, we will dive into more in-depth topics of Unikraft, including

programming structures and architectures, how it is organized, methodologies for

porting libraries and applications to Unikraft and more!

The workshop will be hands-on and will take place for 10 days, between August 23

and September 3, 2021, 4pm-8pm CEST. And an 8 hours hackathon on September 4,

2021, 9am-5pm CEST. It will be online and in English. Topics include building

unikernels, benchmarking, debugging, porting applications, virtualization and

platform specifics.

USoC21 and Unikraft are supported by the UNICORE Project, EU Horizon 2020 grant agreement No 825377.

1 - Schedule

Unikraft Summer of Code 2021 (USoC'21) consists of 10 sessions in 10 days and a hackathon.

Each session is 4 hours long and consists of practical demos and then exercises for participants.

The hackathon is an 8 hours event where you’ll have different tasks to enable, test, fix, evaluate and improve applications and libraries with Unikraft.

The complete schedule for USoC'21 is (all times in CEST - Central European Summer Time):

| Date | Interval | Activity | TA(s) |

|---|

| Mon, 23.08.2021 | 3:30pm-4pm

4pm-8pm | Opening Ceremony

Session 01: Baby Steps | RazvanD, Alex, CristiV

Alex, CristiV, Vlad |

| Tue, 24.08.2021 | 4pm-8pm | Session 02: Behind the Scenes | RazvanD, Costi |

| Wed, 25.08.2021 | 4pm-4:30pm

4:30pm-8pm | Tech Talk: Debugging and Tracing in Unikraft

Session 03: Debugging in Unikraft | Simon

CristiV, RazvanD |

| Thu, 26.08.2021 | 4pm-8pm | Session 04: Complex Applications | Costi, Vlad |

| Fri, 27.08.2021 | 4pm-4:30pm

4:30pm-8pm | Tech Talk: Virtual Memory in Unikraft

Session 05: Contributing to Unikraft | Ștefan

Vlad, RazvanD |

| Mon, 30.08.2021 | 4pm-8pm | Session 06: Testing Unikraft | CristiB, Alex |

| Tue, 31.08.2021 | 4pm-4:30pm

4:30pm-8pm | Tech Talk: Memory Deduplication with Unikraft

Session 07: Syscall Shim | Gaulthier

RazvanD, Alex |

| Wed, 01.09.2021 | 4pm-8pm | Session 08: Basic App Porting | Cezar, RazvanD |

| Thu, 02.09.2021 | 4pm-4:30pm

4:30pm-8pm | Tech Talk: Retrofitting Isolation into Unikraft with FlexOS

Session 09: Advanced App Porting | Hugo

Vlad, Cezar |

| Fri, 03.09.2021 | 4pm-8pm | Session 10: High Performance | Alex, RazvanD |

| Sat, 04.09.2021 | 9am-5pm | Hackathon | Dragoș, Gabi, Sergiu, Florin

Laurențiu, Cătălin, Vlad, Alex |

2 - People

Program Chairs and Project Coordinators

Teaching Assistants (TAs)

You’ll see these kind people during the workshop to help answer questions and

work through problems.

Contact

The fastest way to get in contact with anyone in this workshop regarding the

workshop is to join the

Unikraft Community Discord.

3 - Session 01: Baby Steps

In this session we are going to understand the basic layout of the Unikraft working directory, its environment variables, as well as what the most common Unikraft specific files mean.

We are also going to take a look at how we can build basic applications and how we can extend their functionality and support by adding ported external libraries.

Before everything, let’s take a bird’s eye view of what Unikraft is and what we can do with it.

Unikraft is a unikernel SDK, meaning it offers you the blocks (source code, configuration and build system, runtime support) to build and run unikernels.

A unikernel is a single image file that can be loaded and run as a separate running instance, most often a virtual machine.

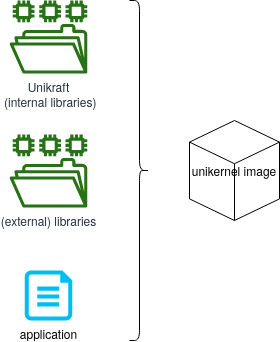

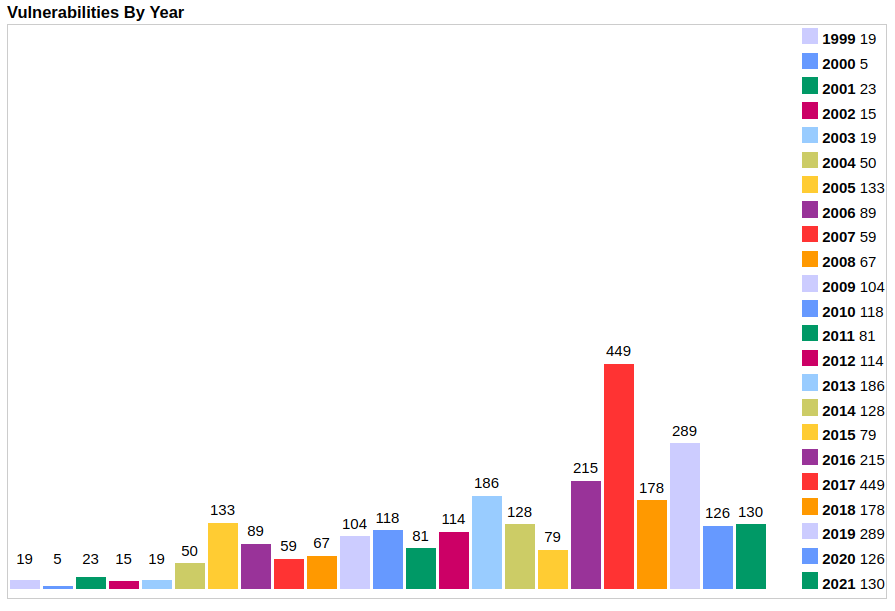

Summarily, Unikraft components are shown in the image below:

Unikraft is the core component, consisting of core / internal libraries, the build system, and platform and architecture code.

It is the basis of any unikernel image.

It is located in the main Unikraft repository.

Libraries are additional software components that will be linked with Unikraft for the final image.

There are multiple supported libraries.

Each unikernel image is using its specific libraries.

Libraries are also called external libraries as they sit outside the main Unikraft repository.

Libraries are typically common libraries (such as OpenSSL or LWIP) that have been ported on top of Unikraft.

They are located in specialized repositories in the Unikraft organization, those whose names start with lib-.

Application is the actual application code.

It typically provides the main() function (or equivalent) and is reliant on Unikraft and external libraries.

Applications that have been ported on top of Unikraft are located in repositories in the Unikraft organization, those whose names start with app-.

An important role of the core Unikraft component is providing support for different platforms and architectures.

A platform is the virtualization / runtime environment used to run the resulting unikernel image.

An architecture details the CPU and memory specifics that will run the resulting image.

As this is a rather complicated setup, a companion tool (kraft) was designed and implemented to provide the interface for configuring, building and running unikernel images based on Unikraft.

The recommended way of building and running Unikraft is via kraft.

We are going to build the helloworld application and the httpreply application using kraft.

We are also going to use the lower-level configuration and build system (based on Kconfig and Makefile) to get a grasp of how everything works.

The lower-level system will be detailed further in session 02: Behind the Scenes.

Demos

00. Manual kraft Installation

Let’s start with installing kraft (and validating the installation).

First of all, make sure you have all the dependencies installed:

$ sudo apt-get install -y --no-install-recommends build-essential \

libncurses-dev libyaml-dev flex git wget socat bison \

unzip uuid-runtime

We begin by cloning the kraft repository on our machine:

git clone https://github.com/unikraft/kraft.git

Now, all we have to do is enter this directory and run the setup installer:

$ cd kraft

$ pip install --user -e .

This will install kraft for the local user.

After installing or updating kraft, the first step is to download / update the software components available for building unikernel images.

For this, run:

$ kraft list update

It’s very likely that running the command above will result in the following error:

GitHub rate limit exceeded. You can tell kraft to use a personal access token by setting the UK_KRAFT_GITHUB_TOKEN environmental variable.

If this is the case, first create a GitHub personal access token by following these instructions.

Then, use the following command:

$ UK_KRAFT_GITHUB_TOKEN=<your_GitHub_token_here> kraft list update

After this is done, you can get a list of all components that are available for use with kraft:

$ kraft list

UNIKRAFT VERSION RELEASED LAST CHECKED

unikraft 0.5 17 hours ago 18 Aug 21

PLATFORMS VERSION RELEASED LAST CHECKED

solo5 0.5 13 Jul 21 18 Aug 21

[...]

LIBRARIES VERSION RELEASED LAST CHECKED

newlib 0.5 5 days ago 18 Aug 21

pthreadpool 0.5 7 days ago 18 Aug 21

lwip 0.5 6 days ago 18 Aug 21

[...]

APPLICATIONS VERSION RELEASED LAST CHECKED

python3 0.4 29 Mar 21 18 Aug 21

helloworld 0.5 29 Mar 21 18 Aug 21

httpreply 0.5 13 Jul 21 18 Aug 21

[...]

So, with kraft we have an interface to configure, build and run unikernel images based on Unikraft core, (external) platforms, (external) libraries and applications.

By default, these are saved to ~/.unikraft/ directory, which is also the value of the UK_WORKDIR environment variable used by kraft.

This represents the working directory for all Unikraft components.

This is the usual layout of the ~/.unikraft/ directory:

|-- apps - This is where you would normally place existing app build

|-- archs - Here we place our custom arch's files

|-- libs - This is where the build system looks for external library pool sources

|-- plats - The files for our custom plats are placed here

`-- unikraft - The core source code of the Unikraft Unikernel

Apart from the general UK_WORKDIR environment variable that points to the overall directory, there are also environment variables available for the above subdirectories:

UK_ROOT - The directory for Unikraft's core source code [default: $UK_WORKDIR/unikraft]

UK_LIBS - The directory of all the external Unikraft libraries [default: $UK_WORKDIR/libs]

UK_APPS - The directory of all the template applications [default: $UK_WORKDIR/apps]

After successfully running the above commands, kraft is now installed on our system and we can get to building and running unikernels.

01. Building and Running the Helloworld Application

This is where the fun part begins - we get to build our first unikernel.

One Command to Rule Them All

kraft makes it easy to download, configure, build existing components into unikernel images and then run those images.

The kraft up command makes it easy to do that with one swoop.

Let’s do that for the helloworld application (listed with kraft list):

$ kraft up -t helloworld hello

100.00% :::::::::::::::::::::::::::::::::::::::: | 21 / 21 |: app/helloworld@0.5

[INFO ] Initialized new unikraft application: /home/razvan/hello

make: Entering directory '/home/razvan/.unikraft/unikraft'

[...]

#

# configuration written to /home/razvan/hello/.config

#

[...]

CC libkvmplat: trace.common.o

CC libkvmplat: traps.isr.o

CC libkvmplat: cpu_features.common.o

[...]

CC libnolibc: errno.o

CC libnolibc: stdio.o

CC libnolibc: ctype.o

[...]

LD hello_kvm-x86_64.ld.o

OBJCOPY hello_kvm-x86_64.o

LD hello_kvm-x86_64.dbg

SCSTRIP hello_kvm-x86_64

GZ hello_kvm-x86_64.gz

LN hello_kvm-x86_64.dbg.gdb.py

[...]

Successfully built unikernels:

=> build/hello_kvm-x86_64

=> build/hello_kvm-x86_64.dbg (with symbols)

[...]

To instantiate, use: kraft run

[...]

Starting VM...

[...]

Tethys 0.5.0~b8be82b

Hello world!

Arguments: "/home/razvan/hello/build/hello_kvm-x86_64" "console=ttyS0"

In the snippet above, we selected parts of the output showing what kraft does behind the scenes:

- It downloads the

helloworld application repository in the hello/ directory. - It configures the repository, resulting in a

.config file. - It builds the required components, resulting in the

build/hello_kvm-x86_64 unikernel image. - It runs the image, resulting in QEMU/KVM being run, with the “Hello world!” message getting printed.

All that magic is done using one command.

A closer inspection of the hello/ folder reveals it is a clone of the app-helloworld repository and it stores the resulting configuration file (.config) and resulting build folder (and images) (build/):

$ ls -Fa hello/

./ ../ build/ CODING_STYLE.md .config Config.uk CONTRIBUTING.md COPYING.md .git/ kraft.yaml main.c MAINTAINERS.md Makefile Makefile.uk monkey.h README.md

Once this is done, we can now run the resulting unikernel image any time we want by simply using kraft run:

$ cd hello/

$ kraft run

[...]

Tethys 0.5.0~b8be82b

Hello world!

Arguments: "/home/razvan/hello/build/hello_kvm-x86_64" "console=ttyS0"

Doing it Step-by-Step Using kraft

The above kraft up command seems like magic and it’s not very clear what’s really happening.

Let’s break that down into subcommands and really get a good grip of the configure, build and run process.

We will go through the same steps above, running a separate command for each step:

- Download / Initialize the helloworld appplication.

- Configure the application, resulting in a

.config file. - Build the required components, resulting in the

build/hello_kvm-x86_64 unikernel image. - Run the image, with the “Hello world!” message getting printed.

Initialize

First, let’s create a directory that will host the application.

We enter the demo/ directory of the current session and we create the 01-hello-world/ directory:

$ cd demo/

$ mkdir 01-hello-world

$ cd 01-hello-world/

Now, we initialize the application by using the template for the helloworld app and see that it’s populated with files belonging to the app:

$ kraft init -t helloworld

$ ls

CODING_STYLE.md Config.uk CONTRIBUTING.md COPYING.md kraft.yaml main.c MAINTAINERS.md Makefile Makefile.uk monkey.h README.md

The kraft.yaml file is the most important file.

It stores kraft-speficic configuration for the app and it’s used by kraft when configuring, building and running the application.

Other files are important as well, but they are used behind the scenes by kraft.

We will detail them later in the session and in session 02: Behind the Scenes.

A unikernel image may be targeted for multiple platforms and architectures.

The available platforms and applications are listed in the kraft.yaml file:

$ cat kraft.yaml

specification: '0.4'

unikraft: '0.5'

architectures:

x86_64: true

arm64: true

platforms:

linuxu: true

kvm: true

xen: true

In our case, we can target the x86_64 or arm64 architectures.

And we can target linuxu, kvm or xen platforms.

The simplest way to select the platform and architecture is by running kraft configure and then interactively use arrow keys to select the desired option:

$ kraft configure

[?] Which target would you like to configure?: 01-hello-world_linuxu-x86_64

> 01-hello-world_linuxu-x86_64

01-hello-world_kvm-x86_64

01-hello-world_xen-x86_64

01-hello-world_linuxu-arm64

01-hello-world_kvm-arm64

01-hello-world_xen-arm64

We have 6 options (2 architectures x 3 platforms).

Once we select one, the configuration will be updated.

The alternate way (non-interactive) is to pass arguments to kraft configure to select the desired platform and architecture.

For example, if we want to use x86_64 and KVM, we use:

$ kraft configure -p kvm -m x86_64

Build

Everything is set up now, all we have left to do is tell the build system to do its magic:

$ kraft build

[...]

Successfully built unikernels:

=> build/01-hello-world_kvm-x86_64

=> build/01-hello-world_kvm-x86_64.dbg (with symbols)

To instantiate, use: kraft run

This results in the creation of two unikernel image files:

build/01-hello-world_kvm-x86_64 - the main image filebuild/01-hello-world_kvm-x86_64.dbg - the image file with debug information (useful for debugging, duh!)

And that’s it! Our final unikernel binary is ready to be launched from the build/ directory.

Run

To run an already-built unikernel image, we use kraft run:

$ kraft run

[...]

Tethys 0.5.0~b8be82b

Hello world!

[...]

If we want to be more specific, we could use:

$ kraft run -p kvm -m x86_64

This command is useful in the case we have multiple images built (for differing platforms and architectures).

We can then select which one to run.

For example, we can use the commands below to configure, build and run a helloworld image for the linuxu platform.

kraft configure -p linuxu -m x86_64

kraft build

kraft run -p linuxu -m x86_64

You can now alter between running the linuxu and the kvm built images by using kraft run with the appropriate arguments.

More on kraft

Of course, this is the most basic way you can use kraft, but there are many other options.

To see every option kraft has to offer, you can simply type kraft -h.

If you want to know about a certain command, just follow it with the -h option.

For example, if I wanted to know more about the configure command, I would type kraft configure -h.

Manually Building the helloworld Application

Let’s now learn how to build the app manually, without kraft.

We won’t go into too much detail, this will be handled more thoroughly in session 02: Behind the Scenes.

The manual approach is more complicated (albeit giving you potentially more control) than kraft.

For most of the use cases (development, testing, evaluating, using) of Unikraft, we recommend you use kraft.

We will go through the same steps as above:

- Download / Initialize the helloworld application.

- Configure the application, resulting in a

.config file. - Build the required components, resulting in the

build/hello_kvm-x86_64 unikernel image. - Run the image, with the “Hello world!” message getting printed.

Initialize

First, get out of the current build’s directory and make a new one:

$ cd ../ && mkdir 01-hello-world-manual && cd 01-hello-world-manual

Now, clone the remote Git repository:

$ git clone https://github.com/unikraft/app-helloworld.git .

$ ls

CODING_STYLE.md Config.uk CONTRIBUTING.md COPYING.md kraft.yaml main.c MAINTAINERS.md Makefile Makefile.uk monkey.h README.md

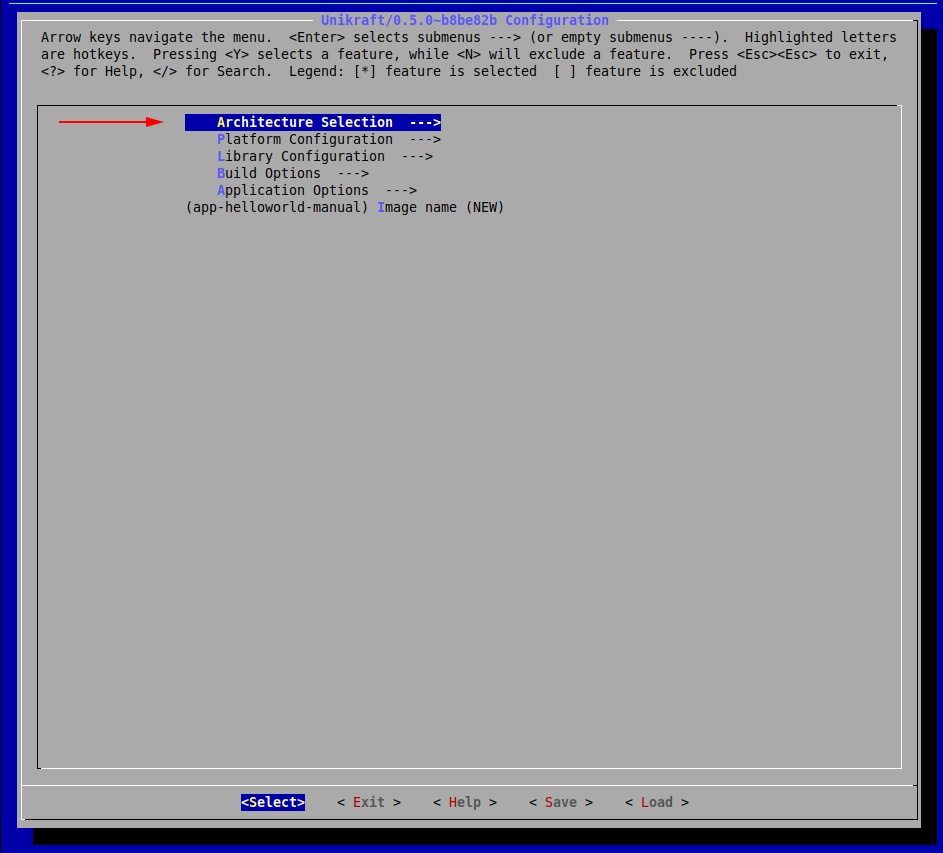

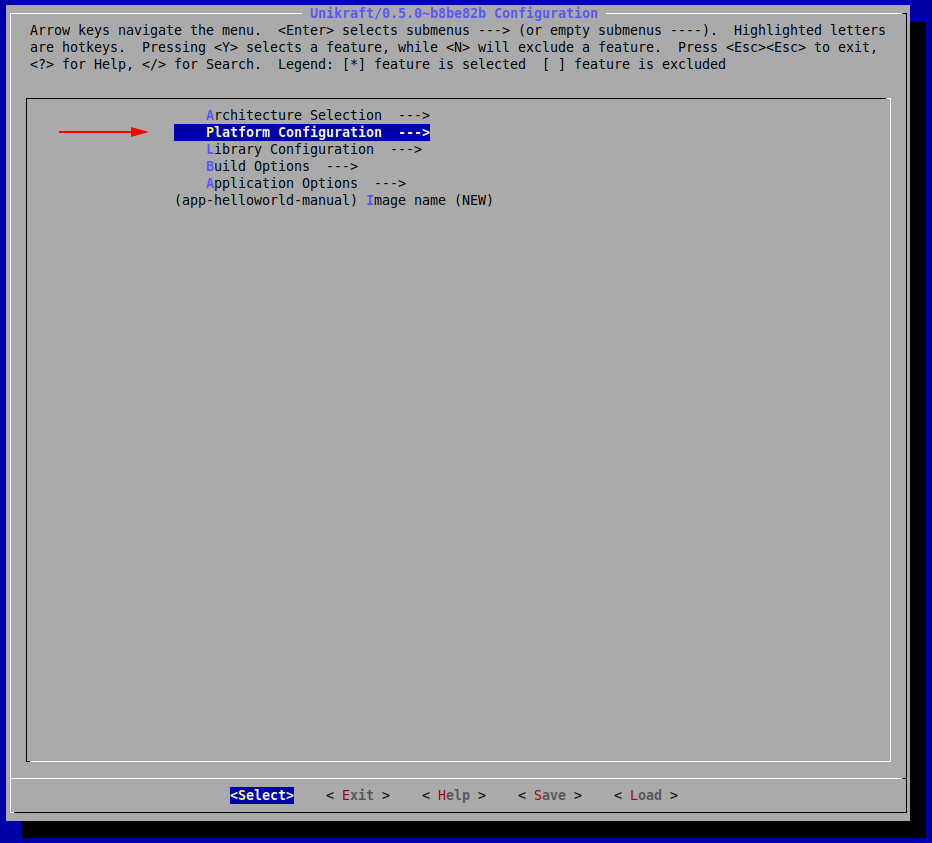

To configure the build process (and the resulting unikernel image) we access a text-user interface menu by using:

$ make menuconfig

Looks like we are met with an error:

$ make menuconfig

Makefile:9: recipe for target 'menuconfig' failed

make: *** [menuconfig] Error 2

We look in the Makefile:

$ cat -n Makefile

1 UK_ROOT ?= $(PWD)/../../unikraft

2 UK_LIBS ?= $(PWD)/../../libs

3 LIBS :=

4

5 all:

6 @$(MAKE) -C $(UK_ROOT) A=$(PWD) L=$(LIBS)

7

8 $(MAKECMDGOALS):

9 @$(MAKE) -C $(UK_ROOT) A=$(PWD) L=$(LIBS) $(MAKECMDGOALS)

The underlying build / configuration system expects the Unikernel (UK_ROOT) to be located at ../../unikraft from the current directory, which is very likely not the case.

Recall that the build system makes use of some important environment variables, namely UK_WORKDIR, UK_ROOT and UK_LIBS.

So, in order to properly inform the build system of our current location, we will have to manually set these by prefixing whatever build command we send with the hardcoded values of where our Unikraft work directory is.

$ UK_WORKDIR=~/.unikraft UK_ROOT=~/.unikraft/unikraft UK_LIBS=~/.unikraft/libs make menuconfig

Note: This menu is also available through the kraft menuconfig command, which rids you of the hassle of manually setting the environment variables.

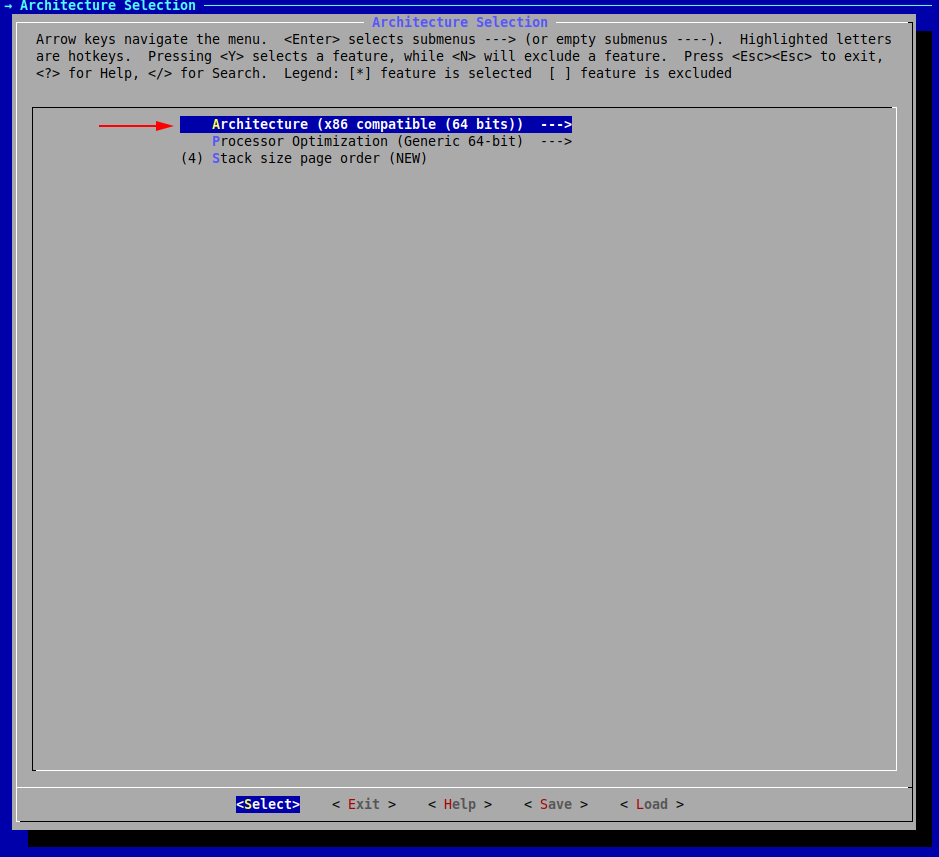

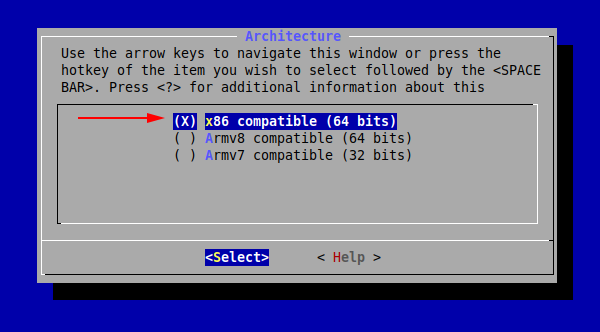

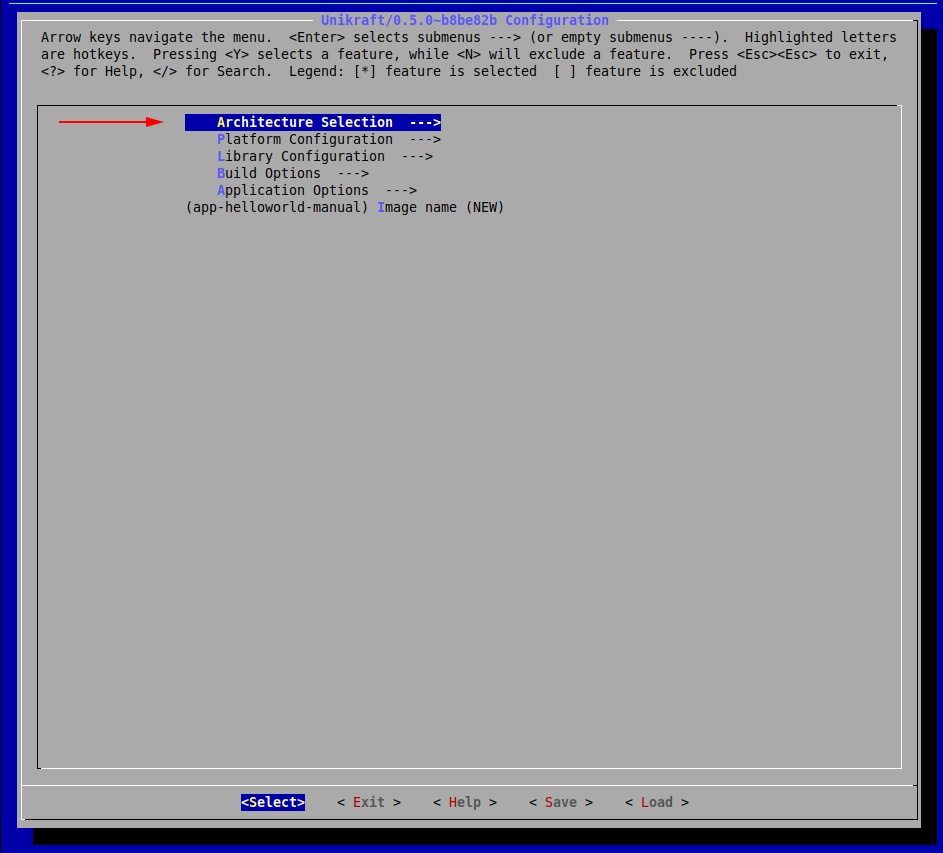

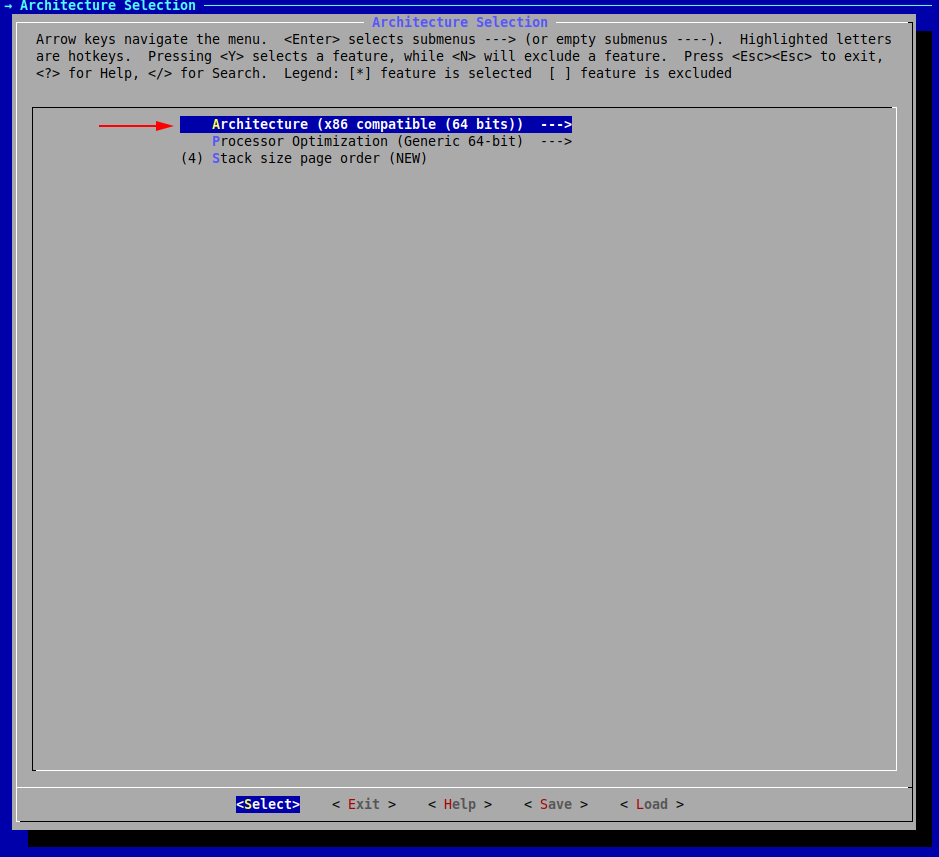

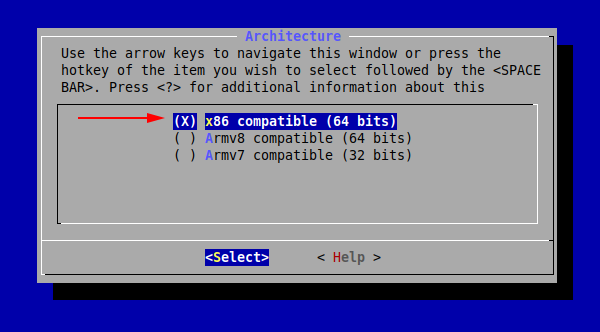

We are met with the following configuration menu. Let’s pick the architecture:

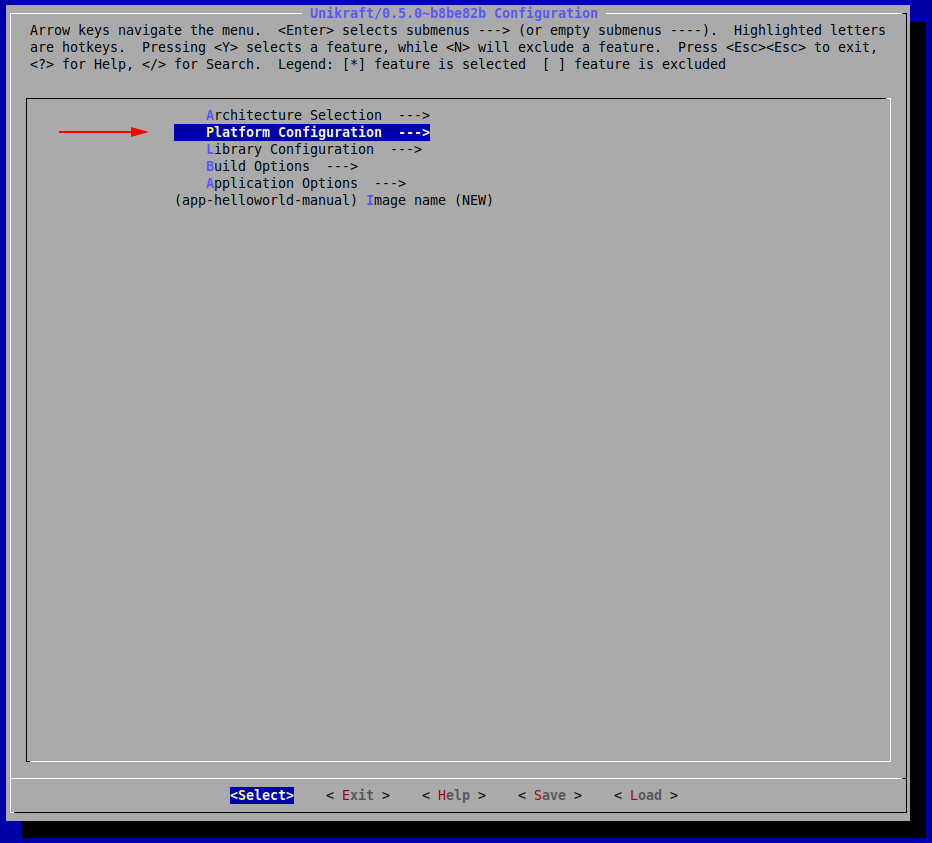

Now, press Exit (or hit the Esc key twice) until you return to the initial menu.

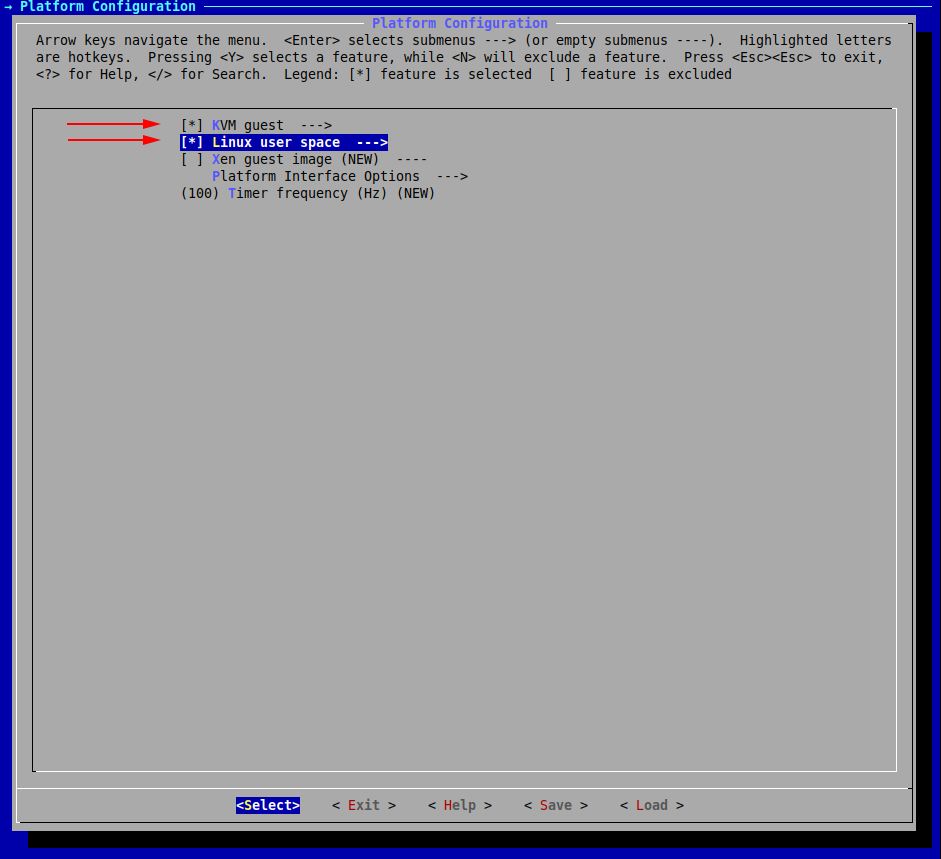

We have now set our desired architecture, let’s now proceed with the platform.

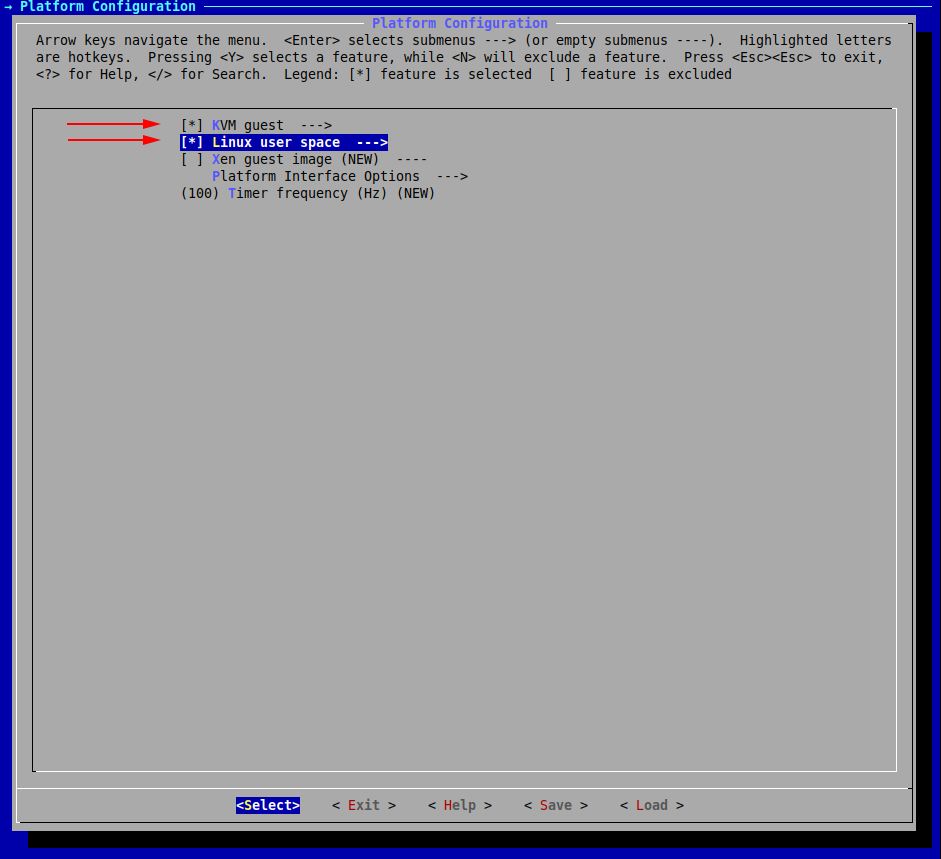

We will choose both linuxu and kvm:

Save and exit the configuration menu by repeatedly selecting Exit.

Build

Now let’s build the final image (recall the environment variables):

$ UK_WORKDIR=~/.unikraft UK_ROOT=~/.unikraft/unikraft UK_LIBS=~/.unikraft/libs make

[...]

LD 01-hello-world-manual_linuxu-x86_64.dbg

SCSTRIP 01-hello-world-manual_kvm-x86_64

GZ 01-hello-world-manual_kvm-x86_64.gz

SCSTRIP 01-hello-world-manual_linuxu-x86_64

LN 01-hello-world-manual_kvm-x86_64.dbg.gdb.py

LN 01-hello-world-manual_linuxu-x86_64.dbg.gdb.py

Our final binaries are located inside the build/ directory.

Run

Let’s run the linuxu image by doing a Linux-like executable running:

$ ./build/01-hello-world-manual_linuxu-x86_64 # The linuxu image

Powered by

o. .o _ _ __ _

Oo Oo ___ (_) | __ __ __ _ ' _) :_

oO oO ' _ `| | |/ / _)' _` | |_| _)

oOo oOO| | | | | (| | | (_) | _) :_

OoOoO ._, ._:_:_,\_._, .__,_:_, \___)

Tethys 0.5.0~b8be82b

Hello world!

To run the KVM image, we use the qemu-system-x86_64 command:

$ qemu-system-x86_64 -kernel build/01-hello-world-manual_kvm-x86_64 -nographic

Powered by

o. .o _ _ __ _

Oo Oo ___ (_) | __ __ __ _ ' _) :_

oO oO ' _ `| | |/ / _)' _` | |_| _)

oOo oOO| | | | | (| | | (_) | _) :_

OoOoO ._, ._:_:_,\_._, .__,_:_, \___)

Tethys 0.5.0~b8be82b

Hello world!

Arguments: "build/hello_kvm-x86_64"

02. Building and Running the httpreply Application

This is where we will take a look at how to build a basic HTTP Server both through kraft and manually.

The latter involves understanding how to integrate ported external libraries, such as lwip.

Using kraft

Just as before, let’s create a directory that will host the application.

We enter the demo/ directory of the current session and we create the 01-hello-world/ directory:

$ cd demo/

$ mkdir 02-httpreply

$ cd 02-httpreply/

Now, we go through the steps above.

Initialize

Retrieve the already existing template for httpreply:

$ kraft init -t httpreply

Configure the building of a KVM unikernel image for x86_64:

$ kraft configure -p kvm -m x86_64

Build

$ kraft build

Run

$ kraft run -p kvm -m x86_64

[...]

Powered by

o. .o _ _ __ _

Oo Oo ___ (_) | __ __ __ _ ' _) :_

oO oO ' _ `| | |/ / _)' _` | |_| _)

oOo oOO| | | | | (| | | (_) | _) :_

OoOoO ._, ._:_:_,\_._, .__,_:_, \___)

Tethys 0.5.0~b8be82b

Listening on port 8123...

Use Ctrl+c to stop the HTTP server running as a unikernel virtual machine.

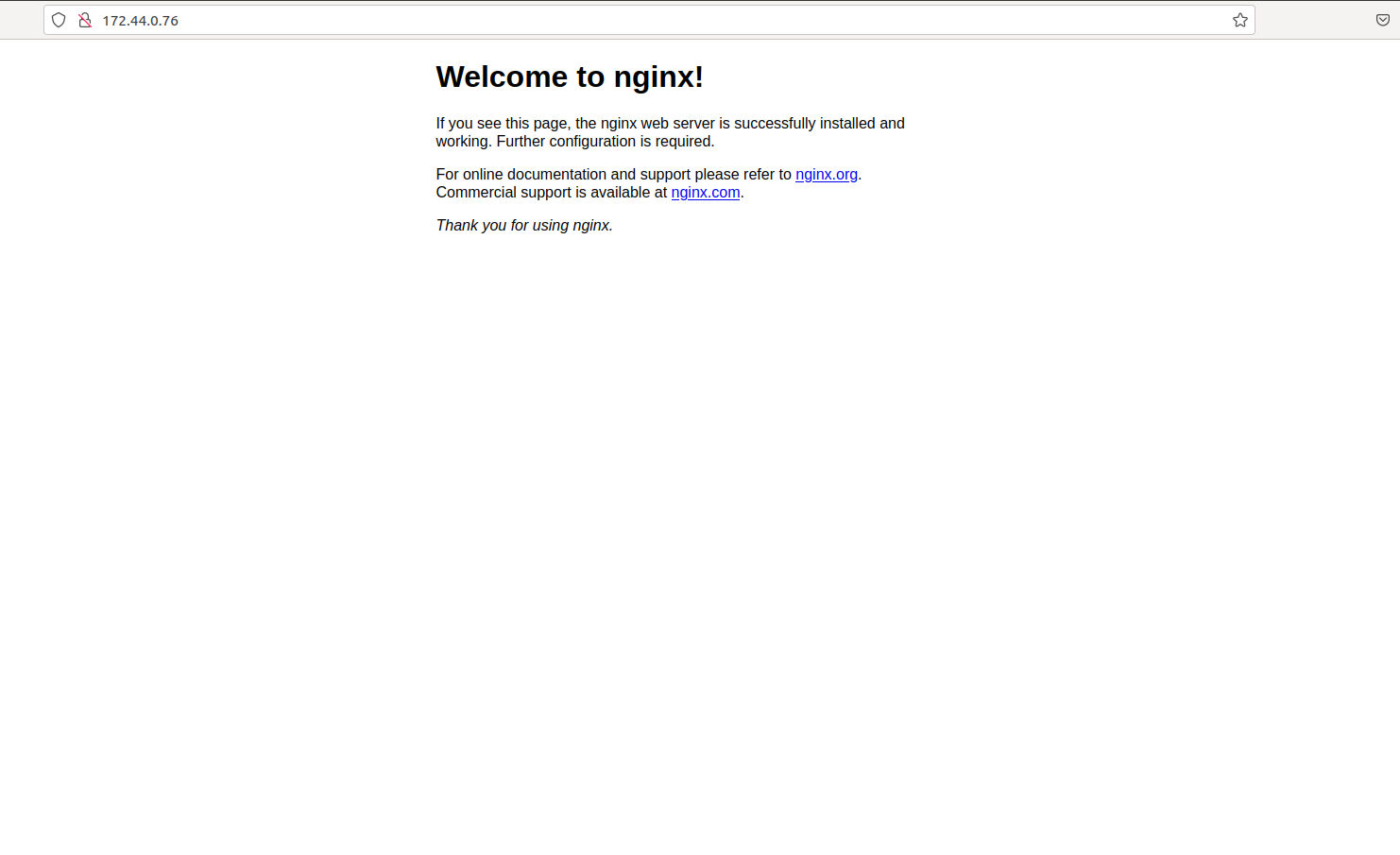

Connecting to the HTTP Server

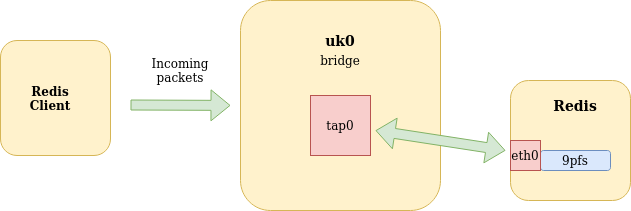

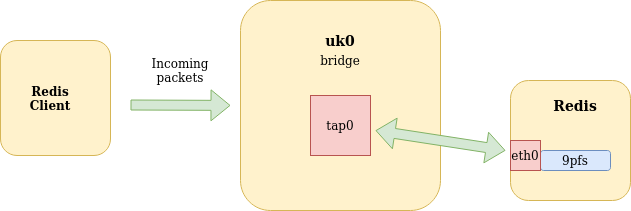

The server listens on port 8123 but we can’t access it, as the virtual machine doesn’t have a (virtual) network connection to the host system and it doesn’t have an IP address.

So we have to create a connection and assign an IP address.

We use a virtual bridge to create a connection between the VM and the host system.

We assign address 172.44.0.1/24 to the bridge interface (pointing to the host) and we assign address 172.44.0.2/24 to the virtual machine, by passing boot arguments.

We run the commands below to create and assign the IP address to the bridge virbr0:

$ sudo brctl addbr virbr0

$ sudo ip a a 172.44.0.1/24 dev virbr0

$ sudo ip l set dev virbr0 up

We can check the proper configuration:

$ ip a s virbr0

420: virbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether 3a:3e:88:e6:a1:e4 brd ff:ff:ff:ff:ff:ff

inet 172.44.0.1/24 scope global virbr0

valid_lft forever preferred_lft forever

inet6 fe80::383e:88ff:fee6:a1e4/64 scope link

valid_lft forever preferred_lft forever

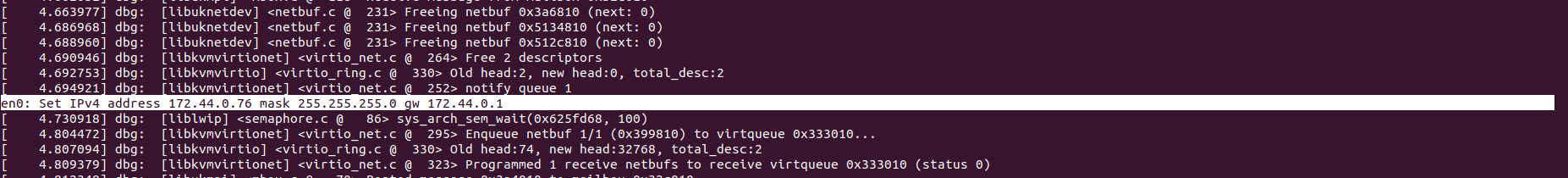

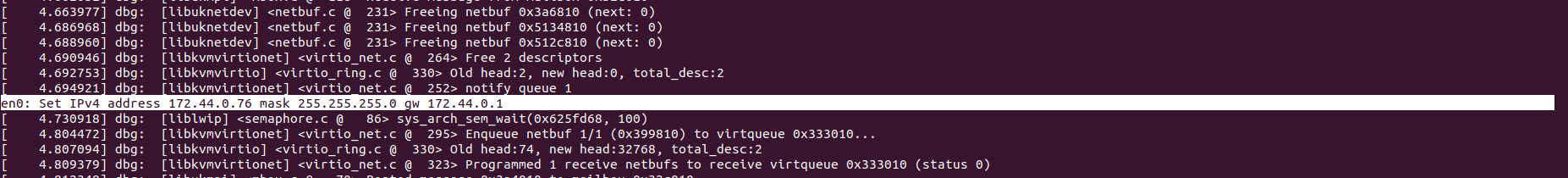

Now we start the virtual machine and pass it the proper arguments to assign the IP address 172.44.0.2/24:

$ kraft run -b virbr0 "netdev.ipv4_addr=172.44.0.2 netdev.ipv4_gw_addr=172.44.0.1 netdev.ipv4_subnet_mask=255.255.255.0 --"

[...]

0: Set IPv4 address 172.44.0.2 mask 255.255.255.0 gw 172.44.0.1

en0: Added

en0: Interface is up

Powered by

o. .o _ _ __ _

Oo Oo ___ (_) | __ __ __ _ ' _) :_

oO oO ' _ `| | |/ / _)' _` | |_| _)

oOo oOO| | | | | (| | | (_) | _) :_

OoOoO ._, ._:_:_,\_._, .__,_:_, \___)

Tethys 0.5.0~b8be82b

Listening on port 8123...

The boot message confirms the assigning of the 172.44.0.2/24 IP address to the virtual machine.

It’s listening on port 8123 for HTTP connections on that IP address.

We use wget to validate it’s working properly and we are able to get the index.html file:

$ wget 172.44.0.2:8123

--2021-08-18 16:47:38-- http://172.44.0.2:8123/

Connecting to 172.44.0.2:8123... connected.

HTTP request sent, awaiting response... 200 OK

[...]

2021-08-18 16:47:38 (41.5 MB/s) - ‘index.html’ saved [160]

Cleaning up means closing the virtual machine (and the HTTP server) and disabling and deleting the bridge interface:

$ sudo ip l set dev virbr0 down

$ sudo brctl delbr virbr0

The Manual Way

Initialize

First, move into a new directory and clone the httpreply repo there.

$ cd .. && mkdir 02-httpreply-manual && cd 02-httpreply-manual

$ git clone https://github.com/unikraft/app-httpreply .

Adding a Makefile

Unlike before, you can notice that this time we are missing the regular Makefile.

Let’s start by copying the Makefile from helloworld:

$ cp ../01-hello-world/Makefile .

This is how it looks like:

$ cat Makefile

UK_ROOT ?= $(PWD)/../../unikraft

UK_LIBS ?= $(PWD)/../../libs

LIBS :=

all:

@$(MAKE) -C $(UK_ROOT) A=$(PWD) L=$(LIBS)

$(MAKECMDGOALS):

@$(MAKE) -C $(UK_ROOT) A=$(PWD) L=$(LIBS) $(MAKECMDGOALS)

As you can see, the previously presented environment values make the same wrong assumption.

Previously, we fixed this by preceding the make command with the updated values for the environment variables, but we could have also simply modified them from within the Makefile, like so:

UK_ROOT ?= $(HOME)/.unikraft/unikraft

UK_LIBS ?= $(HOME)/.unikraft/libs

LIBS :=

@$(MAKE) -C $(UK_ROOT) A=$(PWD) L=$(LIBS)

$(MAKECMDGOALS):

@$(MAKE) -C $(UK_ROOT) A=$(PWD) L=$(LIBS) $(MAKECMDGOALS)

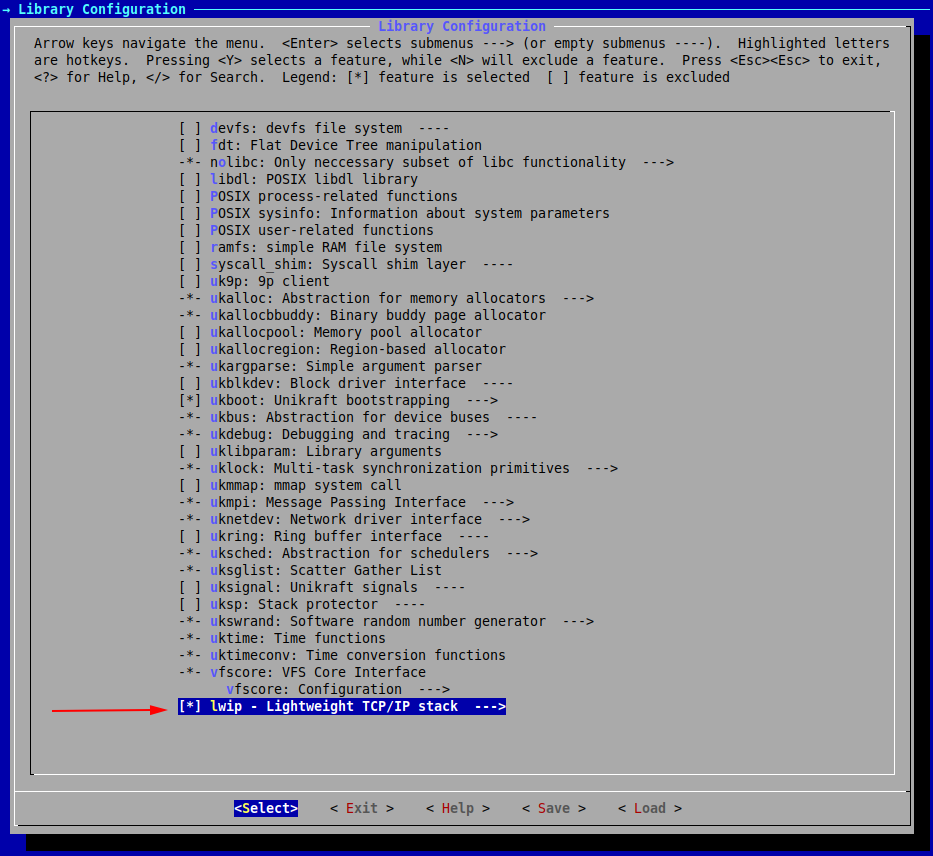

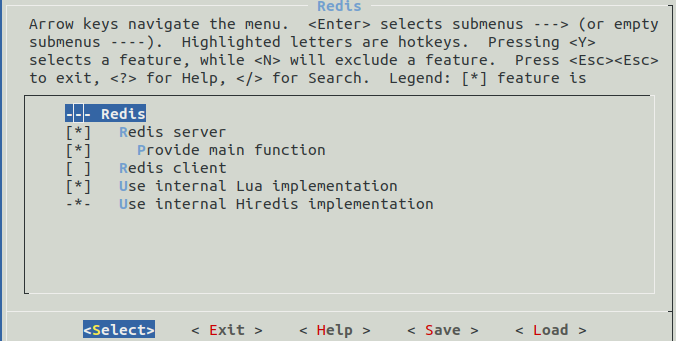

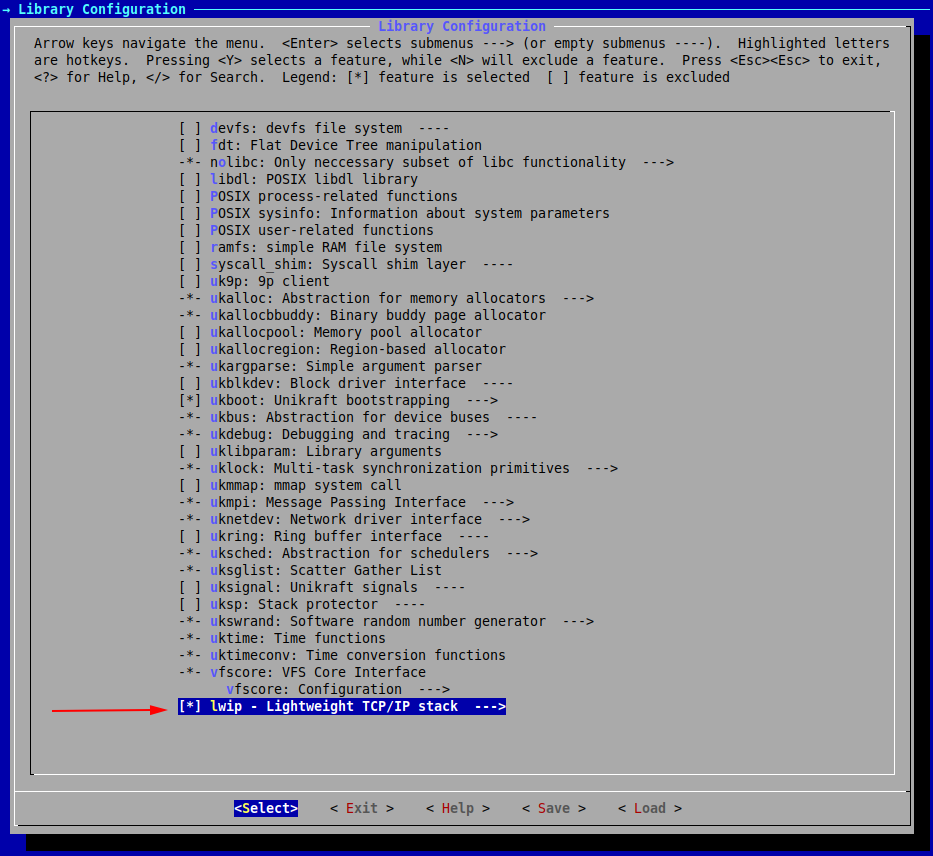

For the HTTP server, however, we need the lwip library, and we have to add it to the LIBS variable in the Makefile.

We add it by first downloading it on our system in $(UK_WORKDIR)/libs/:

$ git clone https://github.com/unikraft/lib-lwip ~/.unikraft/libs/lwip

fatal: destination path '~/.unikraft/libs/lwip' already exists and is not an empty directory.

The library is already cloned. That is because kraft took care of it for us behind the scenes in our previous automatic build.

The next step is to add this library in the Makefile:

UK_ROOT ?= $(HOME)/.unikraft/unikraft

UK_LIBS ?= $(HOME)/.unikraft/libs

LIBS := $(UK_LIBS)/lwip

all:

@$(MAKE) -C $(UK_ROOT) A=$(PWD) L=$(LIBS)

$(MAKECMDGOALS):

@$(MAKE) -C $(UK_ROOT) A=$(PWD) L=$(LIBS) $(MAKECMDGOALS)

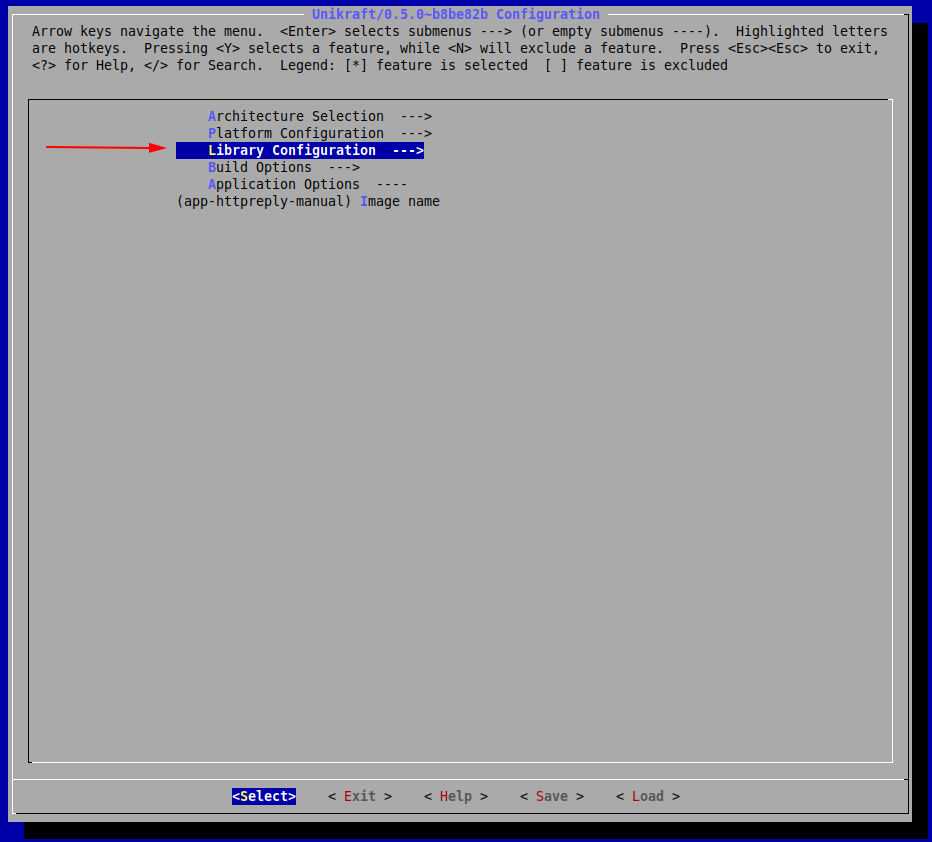

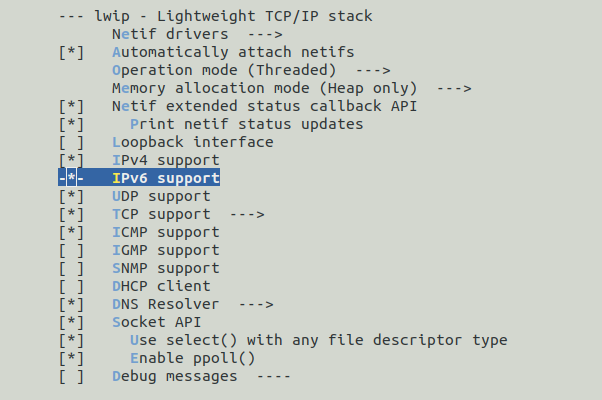

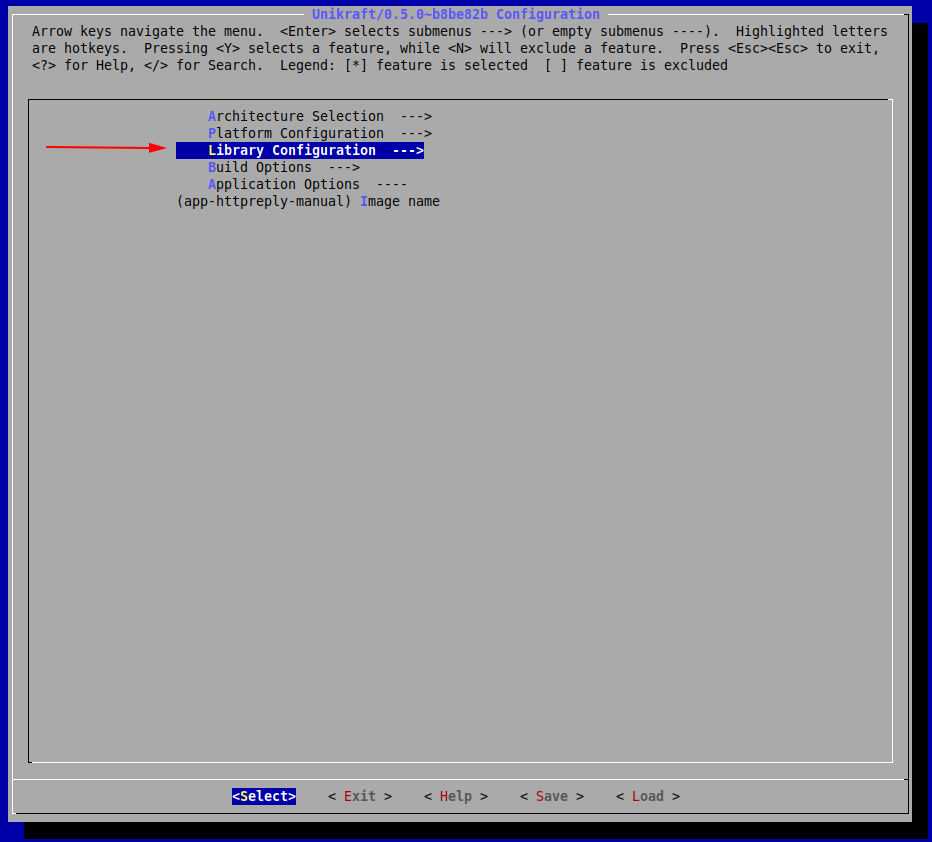

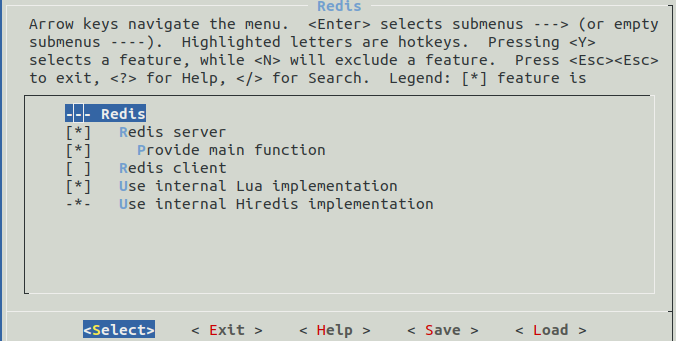

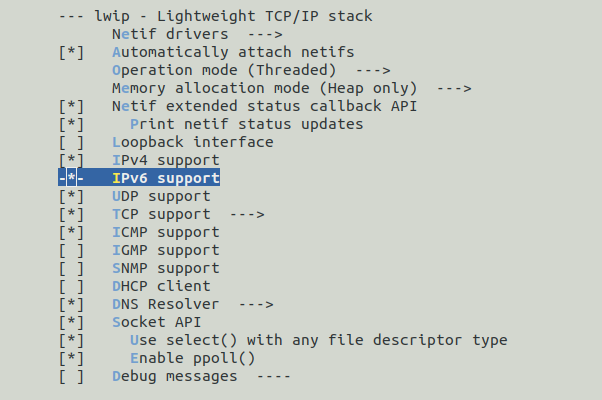

Now, we configure it through make menuconfig.

If you noticed, the menu also automatically selected some other internal components that would be required by lwip.

Now Save and Exit the configuration and run make.

Build

$ make

Run

To run the KVM image, we use the qemu-system-x86_64 command:

$ qemu-system-x86_64 -kernel build/02-httpreply-manual_kvm-x86_64 -nographic

[...]

Powered by

o. .o _ _ __ _

Oo Oo ___ (_) | __ __ __ _ ' _) :_

oO oO ' _ `| | |/ / _)' _` | |_| _)

oOo oOO| | | | | (| | | (_) | _) :_

OoOoO ._, ._:_:_,\_._, .__,_:_, \___)

Tethys 0.5.0~b8be82b

Listening on port 8123...

To close the running QEMU process, use the Ctrl+a x key combination.

Note: We didn’t go into configuring a functional network connection and actually querying the HTTP server.

This is a bit more complicated and is outside the scope of this session.

sudo qemu-system-x86_64 -netdev bridge,id=en0,br=virbr0 -device virtio-net-pci,netdev=en0 -append "netdev.ipv4_addr=172.44.0.2 netdev.ipv4_gw_addr=172.44.0.1 netdev.ipv4_subnet_mask=255.255.255.0 --" -kernel build/02-httpreply-manual_kvm-x86_64 -nographic

Connecting to the HTTP Server

Similarly to kraft, in order to connect to the HTTP server, we use a virtual bridge to create a connection between the VM and the host system.

We assign address 172.44.0.1/24 to the bridge interface (pointing to the host) and we assign address 172.44.0.2/24 to the virtual machine, by passing boot arguments.

We run the commands below to create and assign the IP address to the bridge virbr0:

$ sudo brctl addbr virbr0

$ sudo ip a a 172.44.0.1/24 dev virbr0

$ sudo ip l set dev virbr0 up

Now we start the virtul machine and pass it the proper arguments to assing the IP address 172.44.0.2/24:

$ sudo qemu-system-x86_64 -netdev bridge,id=en0,br=virbr0 -device virtio-net-pci,netdev=en0 -append "netdev.ipv4_addr=172.44.0.2 netdev.ipv4_gw_addr=172.44.0.1 netdev.ipv4_subnet_mask=255.255.255.0 --" -kernel build/02-httpreply-manual_kvm-x86_64 -nographic

0: Set IPv4 address 172.44.0.2 mask 255.255.255.0 gw 172.44.0.1

en0: Added

en0: Interface is up

Powered by

o. .o _ _ __ _

Oo Oo ___ (_) | __ __ __ _ ' _) :_

oO oO ' _ `| | |/ / _)' _` | |_| _)

oOo oOO| | | | | (| | | (_) | _) :_

OoOoO ._, ._:_:_,\_._, .__,_:_, \___)

Tethys 0.5.0~b8be82b

Listening on port 8123...

[...]

The boot message confirms the assigning of the 172.44.0.2/24 IP address to the virtual machine.

It’s listening on port 8123 for HTTP connections on that IP address.

We use wget to validate it’s working properly and we are able to get the index.html file:

$ wget 172.44.0.2:8123

--2021-08-18 16:47:38-- http://172.44.0.2:8123/

Connecting to 172.44.0.2:8123... connected.

HTTP request sent, awaiting response... 200 OK

[...]

2021-08-18 16:47:38 (41.5 MB/s) - ‘index.html’ saved [160]

Cleaning up means closing the virtual machine (and the HTTP server) and disabling and deleting the bridge interface:

$ sudo ip l set dev virbr0 down

$ sudo brctl delbr virbr0

Summary

kraftis an extremely useful tool for quickly deploying unikernel images.

It abstracts away many factors that would normally increase the difficulty of such tasks.

Through just a simple set of a few commands, we can build and run a set of fast and secure unikernel images with low memory footprint.

Practical Work

01. Echo-back Server

You will have to implement a simple echo-back server in C for the KVM platform.

The application will have to be able to open a socket on 172.44.0.2:1234 and send back to the client whatever the client sends to the server.

If the client closes the connection, the server will automatically close.

Enter the work/01-echo-back/ directory.

Check the source code file (main.c) and support files.

Work on the contents to have a viable echo-back server implementation.

Things to consider:

- You will need some network client utility such as

netcat. - You will need the Lightweight TCP/IP stack library (lwip): https://github.com/unikraft/lib-lwip

- You will have to update the build and support files in the

work/01-echo-back/ directory. - If you want to run the application without

kraft, the KVM launch script and network setup are already included inside work/01-echo-back/launch.sh.

To test if your application works you can try sending it messages like so:

$ nc 172.44.0.2 1234

After connecting to the server, whatever you enter in standard input, should be echoed back to you.

02. ROT-13

Update the previously built application, to echo back a rot-13 encoded message.

To do this, you will have to create a custom function inside lwip (~/.unikraft/libs/lwip/) that your application (from the new directory work/02-rot13) can call in order to encode the string.

For example, you could implement the function void rot13(char *msg); inside ~/.unikraft/libs/lwip/sockets.c and add its header inside ~/.unikraft/libs/lwip/include/sys/socket.h.

The required resources are the exact same as in the previous exercise, you will just have to update lwip.

To test if this works, use the same methodology as before, but ensure that the echoed back string is encoded.

03. Tutorial: Mount 9pfs

In this tutorial, we will see what we would need to do if we wanted to have a filesystem available.

To make it easy, we will use the 9pfs filesystem, as well as the newlib library.

The latter is used so that we have available an API that would enable us to interact with this filesystem (functions such as lseek, open).

Note: the build will fail if unikraft and newlib repositories aren’t both on the staging or the stable branches.

To avoid this situation, go to ~/.unikraft/unikraft and checkout branch staging:

cd ~/.unikraft/unikraft

git checkout staging

We will need to download newlib:

git clone https://github.com/unikraft/lib-newlib.git ~/.unikraft/libs/newlib

Next, we include it in our Makefile:

LIBS := $(UK_LIBS)/lwip:$(UK_LIBS)/newlib

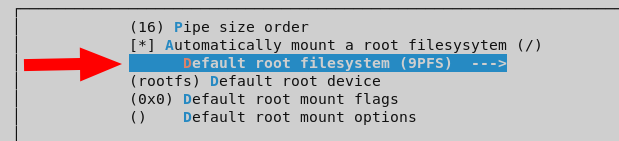

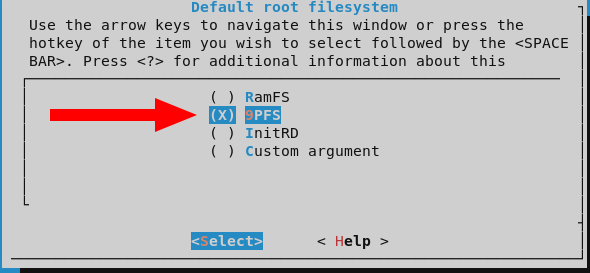

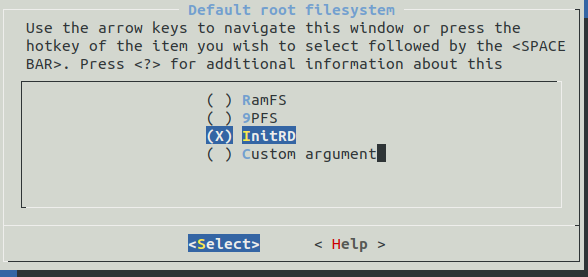

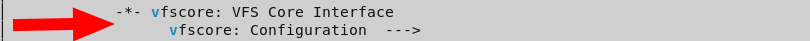

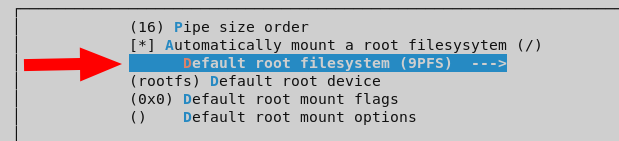

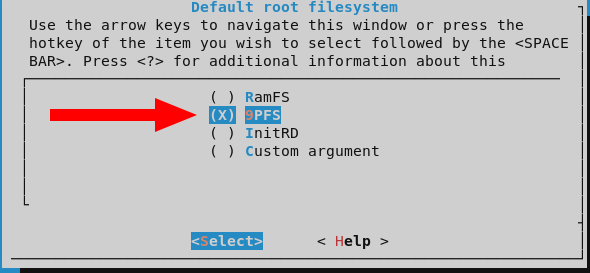

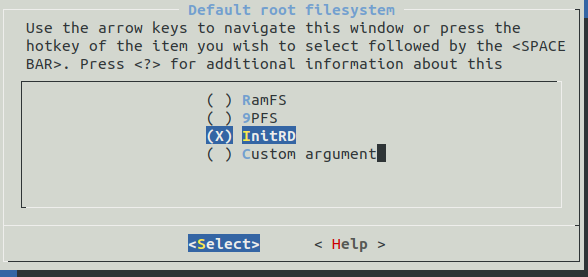

And now, for the final step, through make menuconfig make sure you have selected libnewlib as well as 9pfs: 9p filesystem inside the Library Configuration menu.

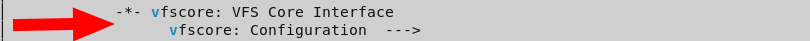

We will also check these options inside Library Configuration -> vfscore: Configuration:

What is more, you should also have present in the current directory an additional directory called fs0:

mkdir fs0

And so, fs0 will contain whatever files you create, read from or write to from within your unikernel.

For now, just make sure it successfully builds. If it does, move on to the next work item.

04. Store Strings

For the final work item, you will have to update the source code from the second task, so that it stores in a file the received string before sending the encoded one back to the client.

In order to achieve this, you must have the previous work item completed.

The available resources are the exact same, you will simply have to modify main.c.

To test if your application ran successfully, check to see whether the original strings you sent through the client are present in that file or not.

Further Reading

Unikraft Documentation

4 - Session 02: Behind the Scenes

Reminders

Kraft

Kraft is the tool developed by the Unikraft team, to make application deployment easier.

To automatically download, configure, build and run an application, for example Helloworld, run

$ kraft list update

$ kraft up -t helloworld@staging ./my-first-unikernel

If you are already working with cloned / forked repositories from Unikraft, kraft can also help you configure, build and run you application.

kraft up can be broken down into the following commands:

$ kraft configure

$ kraft build

$ kraft run

For this session, the following tools are needed: qemu-kvm, qemu-system-x86_64, qemu-system-aarch64, gcc-aarch64-linux-gnu.

To install on Debian/Ubuntu use the following command

$ sudo apt-get -y install qemu-kvm qemu-system-x86 qemu-system-arm gcc-aarch64-linux-gnu

Support Files

Session support files are available in the repository.

If you already cloned the repository, update it and enter the session directory:

$ cd path/to/repository/clone

$ git pull --rebase

$ cd content/en/docs/sessions/02-behind-scenes/

$ ls

demo/ images/ index.md sol/

If you haven’t cloned the repository yet, clone it and enter the session directory:

$ git clone https://github.com/unikraft/summer-of-code-2021

$ cd summer-of-code-2021/content/en/docs/sessions/02-behind-scenes/

$ ls

demo/ images/ index.md sol/

Overview

01. Virtualization

Through virtualization, multiple operating systems (OS) are able to run on the same hardware, independently, thinking that each one of them controls the entire system.

This can be done using a hypervisor, which is a low-level software that virtualizes the underlying hardware and manages access to the real hardware, either directly or through the host Operating System.

There are 2 main virtualized environments: virtual machines and containers, each with pros and cons regarding complexity, size, performance and security.

Unikernels come somewhere between those 2.

Virtual Machines

Virtual machines represent an abstraction of the hardware, over which an operating system can run, thinking that it is alone on the system and that it controls the hardware below it.

Virtual machines rely on hypervisors to run properly.

Those hypervisors can be classified in 2 categories: Type 1 and Type 2.

We won’t go in depth into them, but it is good to know how they are different:

- The Type 1 hypervisor, also known as bare-metal hypervisor, has direct access to the hardware and controls all the operating systems that are running on the system.

KVM, despite the appearances, is a Type 1 hypervisor.

- The Type 2 hypervisor, also known as hosted hypervisor, has to go through the host operating system to reach the hardware.

An example of Type 2 hypervisor is VirtualBox.

|  |

|---|

| Operating systems over type 1 hypervisor | Operating systems over type 2 hypervisor |

Containers

Containers are environments designed to contain and run only one application and its dependencies.

This leads to very small sizes.

The containers are managed by a Container Management Engine, like Docker, and are dependent on the host OS, as they cannot run without it.

|

|---|

| Containers |

Unikraft

Unikraft has a size comparable with that of a container, while it retains the power of a virtual machine, meaning it can directly control the hardware components (virtualized, or not, if running bare-metal).

This gives it an advantage over classical Operating Systems.

Being a special type of operating system, Unikraft can run bare-metal or over a hypervisor.

|  |

|---|

| Unikraft over Type 1 hypervisor | Unikraft over Type 2 hypervisor |

The following table makes a comparison between regular Virtual Machines (think of an Ubuntu VM), Containers and Unikernels, represented by Unikraft:

| Virtual Machines | Containers | Unikernels |

|---|

| Time performance | Slowest of the 3 | Fast | Fast |

| Memory footprint | Heavy | Depends on the number of features | Light |

| Security | Very secure | Least secure of the 3 | Very secure |

| Features | Everything you would think of | Depends on the needs | Only the absolute necessary |

02. linuxu and KVM

Unikraft can be run in 2 ways:

- As a virtual machine, using QEMU/KVM or Xen.

It acts as an operating system, having the responsibility to configure the hardware components that it needs (clocks, additional processors, etc).

This mode gives Unikraft direct and total control over hardware components, allowing advanced functionalities.

- As a

linuxu build, in which it behaves as a Linux user-space application.

This severely limits its performance, as everything Unikraft does must go through the Linux kernel, via system calls.

This mode should be used only for development and debugging.

When Unikraft is running using QEMU/KVM, it can either be run on an emulated system or a (para)virtualized one.

Technically, KVM means virtualization support is enabled.

If using QEMU in emulated mode, KVM is not used.

To keep things simple, we will use interchangeably the terms QEMU, KVM or QEMU/KVM to refer to this use (either virtualized, or emulated).

Emulation is slower, but it allows using CPU architectures different from the local one (you can run ARM code on a x86 machine).

Using (para)virtualisation, aka hardware acceleration, greater speed is achieved and more hardware components are visible to Unikraft.

03. Unikraft Core

The Unikraft core is comprised of several components:

- the architecture code:

This defines behaviours and hardware interactions specific to the target architecture (x86_64, ARM, RISC-V).

For example, for the x86_64 architecture, this component defines the usable registers, data types sizes and how Thread-Local Storage should happen.

- the platform code:

This defines interaction with the underlying hardware, depending on whether a hypervisor is present or not, and which hypervisor is present.

For example, if the KVM hypervisor is present, Unikraft will behave almost as if it runs bare-metal, needing to initialize the hardware components according to the manufacturer specifications.

The difference from bare-metal is made only at the entry, where some information, like the memory layout, the available console, are supplied by the bootloader (Multiboot) and there’s no need to interact with the BIOS or UEFI.

In the case of Xen, many of the hardware-related operations must be done through hypercalls, thus reducing the direct interaction of Unikraft with the hardware.

- internal libraries:

These define behaviour independent of the hardware, like scheduling, networking, memory allocation, basic file systems.

These libraries are the same for every platform or architecture, and rely on the platform code and the architecture code to perform the needed actions.

The internal libraries differ from the external ones in the implemented functionalities.

The internal ones define parts of the kernel, while the external ones define user-space level functionalities.

For example, uknetdev and lwip are 2 libraries that define networking components.

Uknetdev is an internal library that interacts with the network card and defines how packages are sent using it.

Lwip is an external library that defines networking protocols, like IP, TCP, UDP.

This library knows that the packages are somehow sent over the NIC, but it is not concerned how.

That is the job of the kernel.

04. libc in Unikraft

The Unikraft core provides only the bare minimum components to interact with the hardware and manage resources.

A software layer, similar to the standard C library in a general-purpose OS, is required to make it easy to run applications on top of Unikraft.

Unikraft has multiple variants of a libc-like component:

- nolibc is a minimalistic libc, part of the core Unikraft code, that contains only the functionality needed for the core (strings, qsort, etc).

- isrlib is the interrupt-context safe variant of nolibc.

It is used for interrupt handling code.

- newlibc is the most complete libc currently available for Unikraft, but it still lacks some functionalities, like multithreading.

Newlibc was designed for embedded environments.

- musl is, theoretically, the best libc that will be used by Unikraft, but it’s currently in testing.

Nolibc and isrlib are part of the Unikraft core.

Newlibc and musl are external libraries, from the point of view of Unikraft, and they must be included to the build, as shown in Session 01: Baby Steps.

05. Configuring Unikraft - Config.uk

Unikraft is a configurable operating system, where each component can be modified, configured, according to the user’s needs.

This configuration is done using a version of Kconfig, through the Config.uk files.

In these files, options are added to enable libraries, applications and different components of the Unikraft core.

The user can then apply those configuration options, using make menuconfig, which generates an internal configuration file that can be understood by the build system, .config.

Once configured, the Unikraft image can be built, using make, and run, using the appropriate method (Linux ELF loader, qemu-kvm, xen, others).

Configuration can be done in 3 ways:

Manually, using

$ make menuconfig

Adding a dependency in Config.uk for a component, so that the dependency gets automatically selected when the component is enabled.

This is done using depends on and select keywords in Config.uk.

The configuration gets loaded and the .config file is generated by running

$ make menuconfig

This type of configuration removes some configuration steps, but not all of them.

Writing the desired configuration in kraft.yaml.

The configuration gets loaded and the .config file is generated by running

$ kraft configure

In this session, we will use the first and the last configuration options.

06. The Build System - basics

Once the application is configured, in .config, symbols are defined (e.g. CONFIG_ARCH_X86_64).

Those symbols are usable both in the C code, to include certain functionalities only if they were selected in the configuring process, and in the actual building process, to include / exclude source files, or whole libraries.

This last thing is done in Makefile.uk, where source code files are added to libraries.

During the build process, all the Makefile.uk files (from the Unikraft core and external libraries) are evaluated, and the selected files are compiled and linked, to form the Unikraft image.

|

|---|

| The build process of Unikraft |

Summary

- Unikraft is a special type of operating system, that can be configured to match the needs of a specific application.

- This configuration is made possible by a system based on Kconfig, that uses Config.uk files to add possible configurations, and .config files to store the specific configuration for a build.

- The configuration step creates symbols that are visible in both Makefiles and source code.

- Each component has its own Makefile.uk, where source files can be added, removed, or be made dependent on the configuration.

- Unikraft has an internal libc, but it can use others, more complex and complete, like newlib and musl.

- Being an operating system, it needs to be run by a hypervisor, like KVM, xen, to work at full capacity.

It can also be run as an ELF, in Linux, but in this way the true power of Unikraft is not achieved.

Work Items

Support Files

Session support files are available in the repository.

If you already cloned the repository, update it and enter the session directory:

$ cd path/to/repository/clone

$ git pull --rebase

$ cd content/en/docs/sessions/02-behind-scenes/

$ ls

demo/ images/ index.md sol/

If you haven’t cloned the repository yet, clone it and enter the session directory:

$ git clone https://github.com/unikraft/summer-of-code-2021

$ cd summer-of-code-2021/content/en/docs/sessions/02-behind-scenes/

$ ls

demo/ images/ index.md sol/

01. Tutorial / Reminder: Building and Running Unikraft

We want to build the Helloworld application, using the Kconfig-based system, for the linuxu and KVM platforms, for the ARM and x86 architectures, and then run them.

If you don’t have the unikraft and app-helloworld repositories cloned already, do so, by running the following commands:

$ git clone https://github.com/unikraft/unikraft

$ cd apps

$ git clone https://github.com/unikraft/app-helloworld helloworld/

As you can see from the commands above, it is recommended to have the following file structure in your working directory:

workdir

|_______apps

| |_______helloworld

|_______libs

|_______unikraft

Make sure that UK_ROOT and UK_LIBS are set correctly in the Makefile file, in the helloworld folder.

If you are not sure if they are set correctly, set them like this:

UK_ROOT ?= $(PWD)/../../unikraft

UK_LIBS ?= $(PWD)/../../libs

Linuxu, x86_64

First, we will the image for the linuxu platform.

As the resulting image will be an ELF, we can only run the x86 Unikraft image.

We follow the steps:

While in the helloworld folder, run

$ make menuconfig

From Architecture Selection, select Architecture -> x86 compatible.

From Platform Configuration, select Linux user space.

Save, exit and run

$ make

The resulting image, app-helloworld_linuxu-x86_64 will be present in the build/ folder.

Run it.

$ ./build/app-helloworld_linuxu-x86_64

KVM, x86_64

Next, we will build the image for the kvm platform.

Before starting the process, make sure that you have the necessary tools, listed in the Required Tools section.

We follow the steps:

Run

$ make menuconfig

We will leave the architecture as is, for now.

From Platform Configuration, select KVM guest.

Save, exit and run

$ make

Load the resulting image in QEMU by using

$ sudo qemu-system-x86_64 -kernel ./build/app-helloworld_kvm-x86_64 -serial stdio

Besides -serial stdio, no other option is needed to run the Helloworld application.

Other, more complex applications, will require more options given to qemu.

We have run Unikraft in the emulation mode, with the command from above.

We can also run it in the virtualization mode, by adding the -enable-kvm option.

You may receive a warning, host doesn't support requested feature:.

This is because kvm uses a generic CPU model.

You can instruct kvm to use your local CPU model, by adding -cpu host to the command.

The final command will look like this:

$ sudo qemu-system-x86_64 -enable-kvm -cpu host -kernel ./build/app-helloworld_kvm-x86_64 -serial stdio

While we are here, we can check some differences between emulation and virtualization.

Record the time needed by each image to run, using time, like this:

$ time sudo qemu-system-x86_64 -kernel ./build/app-helloworld_kvm-x86_64 -serial stdio

$ time sudo qemu-system-x86_64 -enable-kvm -cpu host -kernel ./build/app-helloworld_kvm-x86_64 -serial stdio

Because helloworld is a simple application, the real running time will be similar.

The differences are where each image runs most of its time: in user space, or in kernel space.

Find an explanation to those differences.

KVM, ARM

To configure Unikraft for the ARM architecture, go to the configuration menu, like before, and select, from Architecture Selection, Armv8 compatible.

Save and exit the configuration.

As a new architecture is selected, you have to clean the previously compiled files:

$ make clean

After cleaning, build the image:

$ make

To run Unikraft, use the following command:

$ sudo qemu-system-aarch64 -machine virt -cpu cortex-a57 -kernel ./build/app-helloworld_kvm-arm64 -serial stdio

Note that now we need to provide a machine and a CPU model to be emulated, as there are no defaults available.

If you want to find information about other machines, run

$ sudo qemu-system-aarch64 -machine help

02. Tutorial: Make It Speak

The goal of this exercise is to enable the internal debugging library for Unikraft (ukdebug) and make it display messages up to the info level.

We also want to identify which hardware components are initialized for both x86 and ARM, and where.

ARM

Considering that the last exercise ended with an ARM image, we will start now with that configuration.

We need to enable ukdebug in the configuration menu.

It is located in the Library Configuration menu.

But, for this exercise, besides enabling a component, we must modify it.

Enter the ukdebug configuration menu.

We need to have Enable kernel messages (uk_printk) checked.

Also, we need to change the option below it, Kernel message level, from Show critical and error messages (default) to Show all types of messages.

To make thing prettier, also enable the Colored output option.

Save and exit the configuration, then build and run the image.

We have a bunch of initializations happening, before seeing the “Hello world!” message.

Let’s break them down. We start with the platform internal library, libkvmplat.

Here, the hardware components are initialized, like the Serial module, PL001 UART, and the GIC, which is the interrupt controller.

After that, the memory address space is defined, and the booting process starts, by replacing the current stack with a larger one, that is part of the defined address space.

Lastly, before calling the main function of the application, the software components of Unikraft are initialized, like timers, interrupts, and bus handlers.

The execution ends in in the platform library, with the shutdown command.

x86_64

For the x86 part, just change the architecture in the configuration interface.

Recall that, after changing the architecture, we have to clean the previously compiled files:

$ make clean

Build Unikraft:

$ make

And run in under QEMU/KVM.

The output differs.

We can see that, in the case of x86, the platform library initializes less components, or it is less verbose than the ARM one.

But the timer and bus initialization is more verbose.

We see what timer is used, the i8254 one.

Also, we see that the PCI bus is used.

If you are wondering what the Constructors are, they will be covered in Session 06: Testing Unikraft

03. More Messages

Sometimes we need a more detailed output.

For this, ukdebug has the option to show debug level messages.

Enable them and run Unikraft, for either ARM or x86 architectures, or both.

04. Going through the Code

Having the output of ukdebug, go through the Unikraft code, in the unikraft folder.

Find the components that you have seen in the outputs, in the platform library, and where the kernel messages are sent.

The platform library, even though is called a library, is not in the lib subfolder.

It is placed in the plat folder.

Explore the code, at your own pace.

Can you also find where the main function is called?

05. I Have an Important Message

Send an important kernel message, that everyone needs to see, right before the main function is called.

Try different message levels (critical, error, warning, info, debug), to see how they differ.

Note: sending a critical kernel message will not affect how Unikraft runs after the message.

06. Tutorial / Reminder: Adding Filesystems to an Application

For this tutorial, the aim is to create a simple QEMU/KVM application that reads from a file and displays the contents to standard output.

A local directory is to be mounted as the root directory (/) inside the QEMU/KVM virtual machine.

Some parts of this tutorial were already discussed in Session 01: Baby Steps.

We will use both the manual approach (make and qemu-system-x86_64 / qemu-guest) and kraft to configure, build and run the application.

Setup

The basic setup is in the work/06-adding-filesystems/ folder in the session directory.

Enter that folder:

$ cd work/06-adding-filesystems/

$ ls -F

guest_fs/ kraft.yaml launch.sh* main.c Makefile Makefile.uk qemu-guest*

The guest_fs/ local directory is to be mounted as the root directory (/) inside the QEMU/KVM virtual machine.

It contains the grass file.

The program (main.c) reads the contents of the /grass file and prints it to standard output.

Makefile.uk lists the main.c file as the application source file to be compiled and linked with Unikraft.

Makefile is used by the manual configuration and build system.

kraft.yaml is used by kraft to configure, build and run the application.

launch.sh is a wrapper script around qemu-system-x86_64 used to manually run the application.

Similarly, qemu-guest is a wrapper script used internally by kraft.

We’ll use it as well to run the application.

If, at any point of this tutorial, something doesn’t work, or you want a quick check, see the reference solution in sol/06-adding-filesystems/ folder in the session directory.

Using the Manual Approach

Firstly, we will use the manual approach to configure, build and run the application.

For filesystem functionalities (opening, reading, writing files) we require a more powerful libc.

newlib is already ported in Unikraft and will do nicely.

For this, we update the LIBS line in the Makefile:

LIBS := $(UK_LIBS)/newlib

Update the UK_ROOT and UK_LIBS variables in the Makefile to point to the folders storing the Unikraft and libraries repositories.

Make sure that both unikraft and newlib repositories are on the staging branch.

Go to each of the two repository folders (unikraft and newlib) and check the current branch:

$ git checkout

Now we need to enable 9pfs and newlib in Unikraft.

To do this, we run:

$ make menuconfig

We need to select the following options, from the Library Configuration menu:

libnewlibvfscore: VFS Core Interfacevfscore: VFS Configuration -> Automatically mount a root filesystem -> Default root filesystem -> 9pfs- For the

Default root device option fill the fs0 string (instead of the default rootfs string).

These configurations will also mark as required 9pfs and uk9p in the menu.

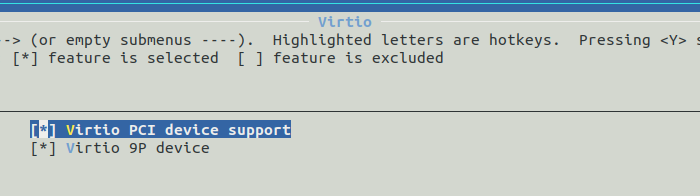

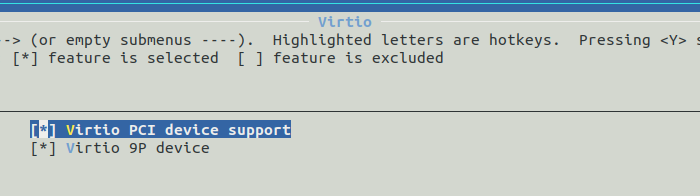

We want to run Unikraft with QEMU/KVM, so we must select KVM guest in the Platform Configuration menu.

For 9PFS we also need to enable, in the KVM guest options menu, Virtio -> Virtio PCI device support.

Save the configuration and exit.

Do a quick check of the configuration in .config by pitting it against the config.sol file in the reference solution:

$ diff -u .config ../../sol/06-adding-filesytstems/config.sol

Differences should be minimal, such as the application identifier.

Build

Build the Unikraft image:

make

Building the Unikraft image will take a while.

It has to pull newlib source code, patch it and then build it, together with the Unikraft source code.

Run with qemu-system-x86_64

To run the Unikraft image with QEMU/KVM, we use the wrapper launch.sh script, that calls qemu-system-x86_64 command with the proper arguments:

$ ./launch.sh ./build/unikraft-kraft-9pfs-issue_kvm-x86_64

[...]

o. .o _ _ __ _

Oo Oo ___ (_) | __ __ __ _ ' _) :_

oO oO ' _ `| | |/ / _)' _` | |_| _)

oOo oOO| | | | | (| | | (_) | _) :_

OoOoO ._, ._:_:_,\_._, .__,_:_, \___)

Tethys 0.5.0~825b115

Hello, world!

File contents: The grass is green!

Bye, world!

A completely manual run would use the command:

$ qemu-system-x86_64 -fsdev local,id=myid,path=guest_fs,security_model=none -device virtio-9p-pci,fsdev=myid,mount_tag=fs0 -kernel build/06-adding-filesystems_kvm-x86_64 -nographic

[...]

Powered by

o. .o _ _ __ _

Oo Oo ___ (_) | __ __ __ _ ' _) :_

oO oO ' _ `| | |/ / _)' _` | |_| _)

oOo oOO| | | | | (| | | (_) | _) :_

OoOoO ._, ._:_:_,\_._, .__,_:_, \___)

Tethys 0.5.0~825b115

Hello, world!

File contents: The grass is green!

Bye, world!

Lets break it down:

-fsdev local,id=myid,path=guest_fs,security_model=none - assign an id (myid) to the guest_fs/ local folder-device virtio-9p-pci,fsdev=myid,mount_tag=fs0 - create a device with the 9pfs type, assign the myid for the -fsdev option and also assign the mount tag that we configured above (fs0)

Unikraft will look after that mount tag when trying to mount the filesystem, so it is important that the mount tag from the configuration is the same as the one given as argument to qemu.-kernel build/06-adding-filesystems_kvm-x86_64 - tells QEMU that it will run a kernel;

if this parameter is omitted, QEMU will think it runs a raw file-nographic - prints the output of QEMU to the standard output, it doesn’t open a graphical window

Run with qemu-guest

qemu-guest is the script used by kraft to run its QEMU/KVM images.

Before looking at the command, take some time to look through the script, and maybe figure out the arguments needed for our task.

To run a QEMU/KVM application using qemu-guest, we use:

$ ./qemu-guest -e guest_fs/ -k build/06-adding-filesystems_kvm-x86_64

If we add the -D option, we can see the qemu-system command generated.

You may get the following error:

[ 0.100664] CRIT: [libvfscore] <rootfs.c @ 122> Failed to mount /: 22

If you do, check that the mount tag in the configuration is the same as the one used by qemu-guest.

qemu-guest will use the tag fs0.

The fs0 tag is hardcoded for qemu-guest (and, thus, for kraft).

This is why we used the fs0 tag when configuring the application with make menuconfig.

Another tag could be used but then we couldn’t run the application with qemu-guest or kraft.

It could only be run by manually using qemu-system-x86_64 with the corresponding arguments.

Using kraft

With kraft, the whole process of configuring, building and running Unikraft can be made easier.

First, we need to replace the TODO lines in kraft.yaml, to reflect our new configuration.

The first set of TODO lines correspond to the Unikraft configuration.

They are used by the kraft configure command.

This is the equivalent of what make menuconfig does.

We need to update those TODO lines with:

kconfig:

- CONFIG_LIBUK9P=y

- CONFIG_LIB9PFS=y

- CONFIG_LIBVFSCORE_AUTOMOUNT_ROOTFS=y

- CONFIG_LIBVFSCORE_ROOTFS_9PFS=y

- CONFIG_LIBVFSCORE_ROOTDEV="fs0"

Then, we need to update the TODO lines for the volume configuration (for mounting the filesystem).

These configuration lines are to be used by the kraft run command.

We need to update those TODO lines with:

volumes:

guest_fs:

driver: 9pfs

In the end, the resulting kraft.yaml file will look like this:

---

specification: '0.5'

name: 06-adding-filesystems

unikraft:

version: 'staging'

kconfig:

- CONFIG_LIBUK9P=y

- CONFIG_LIB9PFS=y

- CONFIG_LIBVFSCORE_AUTOMOUNT_ROOTFS=y

- CONFIG_LIBVFSCORE_ROOTFS_9PFS=y

- CONFIG_LIBVFSCORE_ROOTDEV="fs0"

targets:

- architecture: x86_64

platform: kvm

libraries:

newlib:

version: 'staging'

kconfig:

- CONFIG_LIBNEWLIBC=y

volumes:

guest_fs:

driver: 9pfs

Next, we will make kraft reconfigure our application, using kraft configure.

In our case, nothing should be modified in .config, as we had the same configuration before.

If you get an error like “missing component: newlib”, you need to run kraft list update.

Build

We can now build the application using:

$ kraft build

Run

Run the application using:

$ kraft run

Note: This step is not currently working due to a kraft issue.

You can use the fix described in the issue to make kraft run work.

07. Tutorial: Give the User a Choice

The goal of this exercise is to modify Config.uk, for the Helloworld app, so that the user can choose if the app will display Hello world, or what it reads from the file from the previous exercise.

First of all, we need to add a new configuration in Config.uk.

We will do it like this:

config APPHELLOWORLD_READFILE

bool "Read my file"

default n

help

Reads the file in guest_fs/ and prints its contents,

instead of printing helloworld

After this, we need to modify our code in main.c, to use this configuration option.

#ifndef CONFIG_APPHELLOWORLD_READFILE

printf("Hello world!\n");

#else

FILE *in = fopen("file", "r");

char buffer[100];

fread(buffer, 1, 100, in);

printf("File contents: %s\n", buffer);

fclose(in);

#endif

Note that, for our configuration option APPHELLOWORLD_READFILE, a symbol, CONFIG_APPHELLOWORLD_READFILE, was defined.

We tell GCC that, if that symbol was not defined, it should use the printf("Hello world!\n").

Otherwise, it should use the code written by us.

The last step is to configure the application.

We do this by running make menuconfig, then going to the Application Options and enabling our configuration option.

Now we can build and run the new Unikraft image.

08. Tutorial: Arguments from Command Line

We want to configure the helloworld app to receive command line arguments and then print them.

For this, the Helloworld application already has a configuration option.

Configure the application by running

$ make menuconfig

In the configuration menu, go to Application Options and enable Print arguments.

If we build and run the image now, using qemu-guest, we will see that two arguments are passed to Unikraft: the kernel argument, and a console.

We want to pass it an aditional argument, "foo=bar".

Before this, make sure to reset your configuration, so Unikraft won’t use 9pfs for this task:

$ make clean

Raw qemu command

To send an argument with qemu-system, we use the -append option, like this:

$ qemu-system-x86_64 -kernel build/app-helloworld_kvm-x86_64 -append "console=ttyS0 foo=bar" -serial stdio

qemu-guest script

To send an argument with the qemu-guest script, we use the -a option, like this:

$ ./qemu-guest -k build/app-helloworld_kvm-x86_64 -a "foo=bar"

Kraft

To send an argument while using kraft, run it like this:

$ kraft run "foo=bar"

09. Adding a new source file

Create a new source file for your application, and implement a function that sorts a given integer array, by calling qsort, in turn, from different libc variants, and then prints that array.

For each library, check the size of the Unikraft image.

Enable nolibc and then, as a separate config / build, newlibc, both by using make menuconfig and modifying kraft.yaml.

You will have four different configurations and builds:

- nolibc + kraft

- nolibc + make

- newlibc + kraft

- newlibc + make

10. More Power to the User

Add the possibility to include the new source file only if a configuration option is selected.

Make sure that after this change, the application can still be built and run.

11. Less Power to the User

Delete Config.uk and reconfigure / rebuild the app.

What happens when you run the app?

12. Give Us Feedback

We want to know how to make the next sessions better.

Fo this we need your feedback.

5 - Session 03: Debugging in Unikraft

Because unikernels aim to be a more efficient method of virtualization, this can sometimes cause problems.

This session aims to familiarize you to solve any problem encountered during the development using GDB and Tracepoints.

Reminders

At this stage, you should be familiar with the steps of configuring, building and running any application within Unikraft and know the main parts of the architecture.

Below you can see a list of the commands you have used so far.

| Command | Description |

|---|

kraft list | Get a list of all components that are available for use with kraft |

kraft up -t <appname> <your_appname> | Download, configure and build existing components into unikernel images |

kraft run | Run resulting unikernel image |

kraft init -t <appname> | Initialize the application |

kraft configure | Configure platform and architecture (interactive) |

kraft configure -p <plat> -m <arch> | Configure platform and architecture (non-interactive) |

kraft build | Build the application |

kraft clean | Clean the application |

make menuconfig | Configure application through the main menu |

Support Files

Session support files are available in the repository.

If you already cloned the repository, update it and enter the session directory:

$ cd path/to/repository/clone

$ git pull --rebase

$ cd content/en/docs/sessions/03-debugging/

$ ls

demo/ images/ index.md sol/ work/

If you haven’t cloned the repository yet, clone it and enter the session directory:

$ git clone https://github.com/unikraft/summer-of-code-2021

$ cd summer-of-code-2021/content/en/docs/sessions/03-debugging/

$ ls

demo/ images/ index.md sol/ work/

Debugging

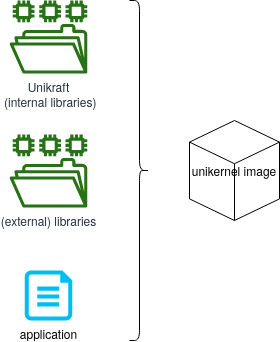

Contrary to popular belief, debugging a unikernel is in fact simpler than debugging a standard operating system.

Since the application and OS are linked into a single binary, debuggers can be used on the running unikernel to debug both application and OS code at the same time.

A couple of hints that should help starting:

- In the configuration menu (presented with

make menuconfig), under Build Options make sure that Drop unused functions and data is unselected.

This prevents Unikraft from removing unused symbols from the final image and, if enabled, might hide missing dependencies during development. - Use

make V=1 to see verbose output for all of the commands being executed during the build.

If the compilation for a particular file is breaking and you would like to understand why (e.g., perhaps the include paths are wrong), you can debug things by adding the -E flag to the command, removing the -o [objname], and redirecting the output to a file which you can then inspect. - Check out the targets under

Miscellaneous when typing make help, these may come in handy.

For instance, make print-vars enables inspecting at the value of a particular variable in Makefile.uk. - Use the individual

make clean-[libname] targets to ensure that you’re cleaning only the part of Unikraft you’re working on and not all the libraries that it may depend on.

This will speed up the build and thus the development process. - Use the Linux user space platform target (

linuxu) for quicker and easier development and debugging.

Using GDB

The build system always creates two image files for each selected platform:

- one that includes debugging information and symbols (

.dbg file extension) - one that does not

Before using GDB, go to the configuration menu under Build Options and select a Debug information level that is bigger than 0.

We recommend 3, the highest level.

Once set, save the configuration and build your images.

Linuxu

For the Linux user space target (linuxu) simply point GDB to the resulting debug image, for example:

$ gdb build/app-helloworld_linuxu-x86_64.dbg

KVM

For KVM, you can start the guest with the kernel image that includes debugging information, or the one that does not.

We recommend creating the guest in a paused state (the -S option):

$ qemu-system-x86_64 -s -S -cpu host -enable-kvm -m 128 -nodefaults -no-acpi -display none -serial stdio -device isa-debug-exit -kernel build/app-helloworld_kvm-x86_64.dbg -append verbose

Note that the -s parameter is shorthand for -gdb tcp::1234.

To avoid this long qemu-system-x86 command with a lot of arguments, we can use qemu-guest.

$ qemu-guest -P -g 1234 -k build/app-helloworld_kvm-x86_64.dbg

Now connect GDB by using the debug image with:

$ gdb --eval-command="target remote :1234" build/app-helloworld_kvm-x86_64.dbg

Unless you’re debugging early boot code (until _libkvmplat_start32), you’ll need to set a hardware break point:

Hardware breakpoints have the same effect as the common software breakpoints you are used to, but they are different in the implementation.

As the name suggests, hardware breakpoints are based on direct hardware support.

This may limit the number of breakpoints you can set, but makes them especially useful when debugging kernel code.

hbreak [location]

continue

We’ll now need to set the right CPU architecture:

disconnect

set arch i386:x86-64:intel

And reconnect:

tar remote localhost:1234

You can now run continue and debug as you would normally.

Xen

Running Unikraft in Xen

For Xen you first need to create a VM configuration (save it under helloworld.cfg):

name = 'helloworld'

vcpus = '1'

memory = '4'

kernel = 'build/app-helloworld_xen-x86_64.dbg'

Start the virtual machine with:

$ xl create -c helloworld.cfg

For Xen the process is slightly more complicated and depends on Xen’s gdbsx tool.

First you’ll need to make sure you have the tool on your system.

Here are sample instructions to do that:

[get Xen sources]

$ ./configure

$ cd tools/debugger/gdbsx/ && make

The gdbsx tool will then be under tools/debugger.

For the actual debugging, you first need to create the guest (we recommend paused state: xl create -p), note its domain ID (xl list) and execute the debugger backend:

$ gdbsx -a [DOMAIN ID] 64 [PORT]

You can then connect GDB within a separate console and you’re ready to debug:

$ gdb --eval-command="target remote :[PORT]" build/helloworld_xen-x86_64.dbg

You should be also able to use the debugging file (build/app-helloworld_xen-x86_64.dbg) for GDB instead passing the kernel image.

Tracepoints

Because Unikraft needs a tracing and performance measurement system, one method to do this is using Unikrat’s tracepoint system.

A tracepoint provides a hook to call a function that you can provide at runtime.

You can put tracepoints at important locations in the code.

They are lightweight hooks that can pass an arbitrary number of parameters, which prototypes are described in a tracepoint declaration placed in a header file.

Dependencies

We provide some tools to read and export trace data that were collected with Unikraft’s tracepoint system.

The tools depend on Python3, as well as the click and tabulate modules.

You can install them by running (Debian/Ubuntu):

sudo apt-get install python3 python3-click python3-tabulate

Enabling Tracing

Tracepoints are provided by lib/ukdebug.

To enable Unikraft to collect trace data, enable the option CONFIG_LIBUKDEBUG_TRACEPOINTS in your configuration (via make menuconfig under Library Configuration -> ukdebug -> Enable tracepoints).

The configuration option CONFIG_LIBUKDEBUG_ALL_TRACEPOINTS activates all existing tracepoints.

Because tracepoints may noticeably affect performance, you can alternatively enable tracepoints only for compilation units that you are interested in.

This can be done with the Makefile.uk of each library.

# Enable tracepoints for a whole library

LIBNAME_CFLAGS-y += -DUK_DEBUG_TRACE

LIBNAME_CXXFLAGS-y += -DUK_DEBUG_TRACE

# Alternatively, enable tracepoints of source files you are interested in

LIBNAME_FILENAME1_FLAGS-y += -DUK_DEBUG_TRACE

LIBNAME_FILENAME2_FLAGS-y += -DUK_DEBUG_TRACE

This can also be done by defining UK_DEBUG_TRACE in the head of your source files.

Please make sure that UK_DEBUG_TRACE is defined before <uk/trace.h> is included:

#ifndef UK_DEBUG_TRACE

#define UK_DEBUG_TRACE

#endif

#include <uk/trace.h>

As soon as tracing is enabled, Unikraft will store samples of each enabled tracepoint into an internal trace buffer.

Currently this is not a circular buffer.

This means that as soon as it is full, Unikraft will stop collecting further samples.

Creating Tracepoints

Instrumenting your code with tracepoints is done by two steps.

First, you define and register a tracepoint handler with the UK_TRACEPOINT() macro.

Second, you place calls to the generated handler at those places in your code where your want to trace an event:

#include <uk/trace.h>

UK_TRACEPOINT(trace_vfs_open, "\"%s\" 0x%x 0%0o", const char*, int, mode_t);

int open(const char *pathname, int flags, ...)

{

trace_vfs_open(pathname, flags, mode);

/* lots of cool stuff */

return 0;

}

UK_TRACEPOINT(trace_name, fmt, type1, type2, ... typeN) generates the handler trace_name() (static function).

It will accept up to 7 parameters of type type1, type2, etc.

The given format string fmt is a printf-style format which will be used to create meaningful messages based on the collected trace parameters.

This format string is only kept in the debug image and is used by the tools to read and parse the trace data.

Unikraft’s trace buffer stores for each sample a timestamp, the name of the tracepoint, and the given parameters.

Reading Trace Data

Unikraft is storing trace data to an internal buffer that resides in the guest’s main memory.

You can use GDB to read and export it.

For this purpose, you will need to load the uk-gdb.py helper script into your GDB session.

It adds additional commands that allow you to list and store the trace data.

We recommend to automatically load the script to GDB.

For this purpose, add the following line to your ~/.gdbinit:

source /path/to/your/build/uk-gdb.py

In order to collect the data, open GDB with the debug image and connect to your Unikraft instance as described in Section Using GDB:

$ gdb build/app-helloworld_linuxu-x86_64.dbg

The .dbg image is required because it contains offline data needed for parsing the trace buffer.

As soon as you let run your guest, samples should be stored in Unikraft’s trace buffer.

You can print them by issuing the GDB command uk trace:

(gdb) uk trace

Alternatively, you can save all trace data to disk with uk trace save <filename>:

(gdb) uk trace save traces.dat

It may make sense to connect with GDB after the guest execution has been finished (and the trace buffer got filled).